1 Specifying the range to apply the optimizer

Specify the boundaries in the Effective Range’s from and to boxes in units of iterations or epochs.

For example, to apply the optimizer between 50 epoch to 100 epoch, enter 50 in the from box and 100 in the to box. To apply the optimizer over the entire range from 1 epoch to the maximum epoch, leave the from and to boxes blank.

2 Specifying the name of the network used for optimization

Set Network to the name of the network created on the edit tab.

3 Specifying the name of the dataset used for optimization

Set data to the name of the dataset loaded on the dataset tab. To use several datasets simultaneously with the optimizer, specify the dataset names by separating each name with a comma.

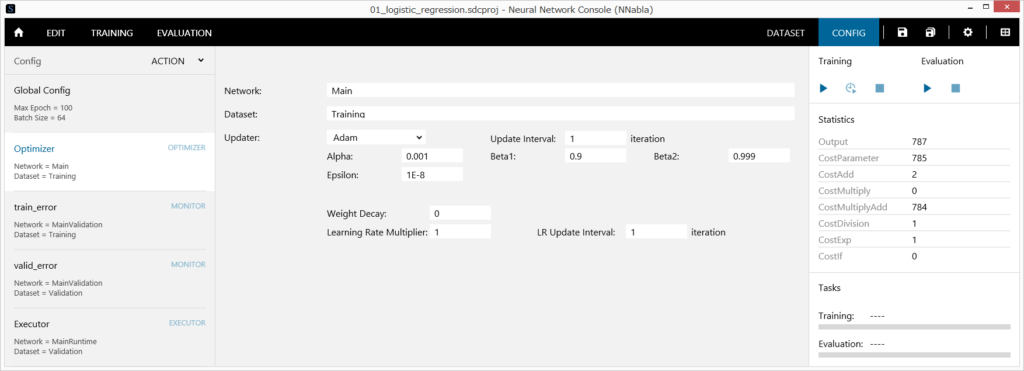

4 Specifying the parameter update method

- From the Config list, select Optimizer.

- Select an updater from the following (Adam by default).

| Updater | Update expression |

| Adadelta | $$g_t \leftarrow \Delta w_t\\ v_t \leftarrow – \frac{RMS \left[ v_t \right]_{t-1}}{RMS \left[ g \right]_t}g_t\\ w_{t+1} \leftarrow w_t + \eta v_t$$ Matthew D. Zeiler ADADELTA: An Adaptive Learning Rate Method |

| Adagrad | $$g_t \leftarrow \Delta w_t\\ G_t \leftarrow G_{t-1} + g_t^2\\ w_{t+1} \leftarrow w_t – \frac{\eta}{\sqrt{G_t} + \epsilon} g_t$$ John Duchi, Elad Hazan and Yoram Singer Adaptive Subgradient Methods for Online Learning and Stochastic Optimization |

| Adam | $$m_t \leftarrow \beta_1 m_{t-1} + (1 – \beta_1) g_t\\ v_t \leftarrow \beta_2 v_{t-1} + (1 – \beta_2) g_t^2\\ w_{t+1} \leftarrow w_t – \alpha \frac{\sqrt{1 – \beta_2^t}}{1 – \beta_1^t} \frac{m_t}{\sqrt{v_t} + \epsilon}$$ Kingma and Ba Adam: A Method for Stochastic Optimization. |

| Adamax | $$m_t \leftarrow \beta_1 m_{t-1} + (1 – \beta_1) g_t\\ v_t \leftarrow \max\left(\beta_2 v_{t-1}, |g_t|\right)\\ w_{t+1} \leftarrow w_t – \alpha \frac{\sqrt{1 – \beta_2^t}}{1 – \beta_1^t} \frac{m_t}{v_t + \epsilon}$$ Kingma and Ba Adam: A Method for Stochastic Optimization. |

| AMSGRAD | $$m_t=\beta_1m_{t-1}+\left(1-\beta_1\right)g_t\\ v_t=\beta_2v_{t-1}+\left(1-\beta_2\right){g_t}^2\\ {\hat{v}}_t=max\left({\hat{v}}_{t-1},\ v_t\right)\\ \theta_{t+1}=\theta_t-\alpha\frac{m_t}{\sqrt{{\hat{v}}_t}+\varepsilon}$$ Reddi et al. On the convergence of ADAM and beyond. |

| Momentum | $$v_t \leftarrow \gamma v_{t-1} + \eta \Delta w_t\\ w_{t+1} \leftarrow w_t – v_t$$ Ning Qian On the momentum term in gradient descent learning algorithms |

| Nag | $$v_t \leftarrow \gamma v_{t-1} – \eta \Delta w_t\\ w_{t+1} \leftarrow w_t – \gamma v_{t-1} + \left(1 + \gamma \right) v_t$$ Yurii Nesterov A method for unconstrained convex minimization problem with the rate of convergence o(1/k2) |

| RMSprop | $$g_t \leftarrow \Delta w_t\\ v_t \leftarrow \gamma v_{t-1} + \left(1 – \gamma \right) g_t^2\\ w_{t+1} \leftarrow w_t – \eta \frac{g_t}{\sqrt{v_t} + \epsilon}$$ Geoff Hinton Lecture 6a : Overview of mini-batch gradient descent http://www.cs.toronto.edu/~tijmen/csc321/slides/lecture_slides_lec6.pdf |

| Sgd | $$w_{t+1} \leftarrow w_t – \eta \Delta w_t$$ |

θ: Parameter to be updated

g: Gradient

η, α: Learning Rate, Alpha (learning rate)

γ, β1, β2: Momentum, or Decay, Beta1, Beta2 (Decay parameters)

ε: Epsilon (a small value used to prevent division by zero)

5 Setting the Weight Decay (L2 regularization) strength

Specify the weight decay coefficient in Weight Decay.

6 Specifying the learning rate decay mode

- From the Config list, select Optimizer.

- Select an LR Scheduler (Learning Rate Scheduler) for the updater from the following (Exponential by default).

- To perform Linear Warmup, select the Warmup check box, and specify the Warmup length in iterations or epochs.

| LR Scheduler | Description |

| Cosine | The learning rate is decayed according to the following formula. $$\eta_t=\frac{\eta_0}{2}\left(1+cos\left(\frac{t}{T}\pi\right)\right)$$ t: Parameter update count up to the present |

| Exponential | The learning rate is decayed using the exponential function. Specify the rate to decay the learning rate in Learning Rate Multiplier. Specify the interval for decaying the learning rate in unit of mini-batches in LR Update Interval. |

Polynomial | The learning rate is decayed using the following polynomial. $$\eta_t=\eta_0\left(1-\left(\frac{t}{T}\right)^p\right)$$ Specify the rate to use in p in Power. |

Step | The learning rate is decayed by the specified multiplying factor every specified iteration or every epoch. Specify the rate to decay the learning rate in Learning Rate Multiplier. Specify the timing for decaying the learning rate in LR Update Steps using comma-separated values. |

7 Updating parameters once every several mini-batches

Specify the parameter update interval in Update Interval. For example, to calculate four gradients using mini-batches containing 64 data samples and update the parameters using these gradients every four mini-batches, set Batch Size to 64 and Update Interval to 4.

Notes

To perform optimization using multiple training networks, Update Interval must be set to 1.

8 Adding a new optimizer

Click the hamburger menu (≡) or right-click the Config list to open a shortcut menu, and click Add Optimizer.

9 Renaming an optimizer

- Click the hamburger menu (≡) or right-click the Config list to open a shortcut menu, and click Rename.

Or, on the Config list, double-click the optimizer you want to rename. - Type the new name, and press Enter.

10 Deleting an optimizer

- From the Config list, select the optimizer you want to delete.

- Click the hamburger menu (≡) or right-click the Config list to open a shortcut menu, and click Delete.

Or, press Delete on the keyboard.

11 Rearranging optimizers

- From the Config list, select the optimizer you want to rearrange.

- Click the hamburger menu or right-click the Config to open a shortcut menu, and click Move Up or Move Down.