We have released an update to Neural Network Console Windows. In this post, we introduce the new features and how to use them.

・Support for exporting to ONNX and NNB files

・Support for importing from ONNX and NNP files

・Support for mixed-precision training

・Other functionalities and improvements

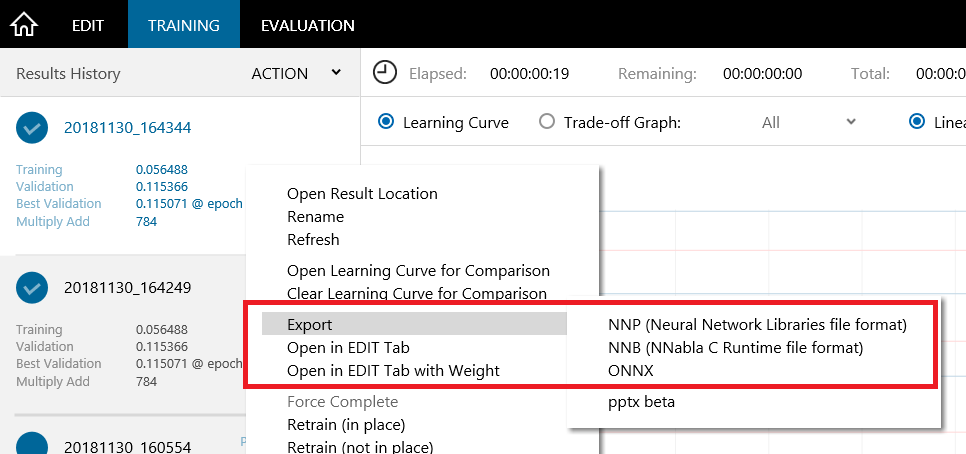

1. Support for exporting to ONNX and NNB files

The Cloud version could already export trained models to ONNX, NNP, and NNB files for download, and the Windows version can now export to these formats as well.

To choose an export format, right-click a training result and select Export from the context menu.

ONNX is an open file format for deep learning models that is supported by many deep learning frameworks and libraries from various companies.

ONNX

https://onnx.ai/

Exporting to ONNX makes it possible to reuse models trained in Neural Network Console Windows in other deep learning frameworks, or to run fast inference using optimized inference environments provided by chip vendors.

NNP is the file format of Neural Network Libraries, an open-source deep learning framework available on GitHub that can be used from Python or C++.

Neural Network Libraries

https://nnabla.org/

Neural Network Libraries (GitHub)

https://github.com/sony/nnabla
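Because an exported NNP file can be inspected outside the GUI, the short Python sketch below lists what an .nnp file contains. It assumes, as we understand nnabla's own saver to work, that an .nnp file is a ZIP archive holding `nnp_version.txt`, a network definition (`.nntxt`, or `.prototxt`) and trained parameters (`.protobuf` or `.h5`). The function `summarize_nnp` is hypothetical and only guesses each member's role from its file name; it is not part of Neural Network Libraries.

```python
import zipfile
from pathlib import PurePosixPath


def summarize_nnp(source):
    """Group the members of an .nnp archive by role (hypothetical helper).

    Assumption: an .nnp file is a ZIP archive; roles are guessed from file names only.
    `source` may be a path or a binary file-like object.
    """
    roles = {"version": [], "network": [], "parameters": [], "other": []}
    with zipfile.ZipFile(source) as archive:
        for name in archive.namelist():
            suffix = PurePosixPath(name).suffix.lower()
            if name == "nnp_version.txt":
                roles["version"].append(name)
            elif suffix in (".nntxt", ".prototxt"):
                roles["network"].append(name)  # network definition in text form
            elif suffix in (".protobuf", ".h5"):
                roles["parameters"].append(name)  # trained parameters (coefficients)
            else:
                roles["other"].append(name)
    return roles
```

For example, `summarize_nnp("result.nnp")` would report the members of an exported file (the file name here is hypothetical). Reading the archive with the standard `zipfile` module keeps the sketch free of any dependency on nnabla itself.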

NNB is the file format for NNabla C Runtime, a reference inference library written almost entirely in pure C.

NNabla C Runtime

https://github.com/sony/nnabla-c-runtime

With NNB export, models trained in Neural Network Console Windows can be deployed to a variety of embedded devices that support C, including SPRESENSE.

SPRESENSE

https://developer.sony.com/develop/spresense/

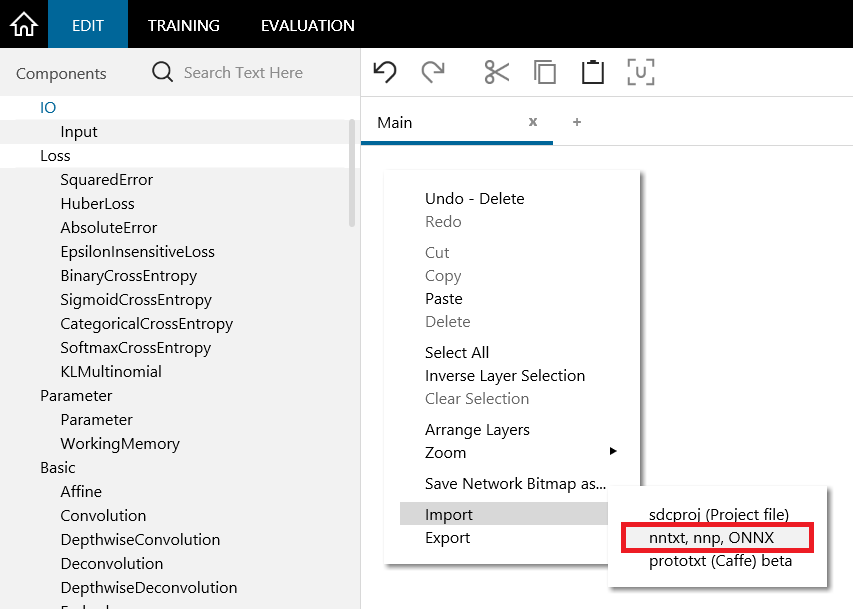

2. Support for importing from ONNX and NNP files

Users can now load ONNX and NNP files, including their trained parameters, into the GUI and reuse them. To import a file, right-click the EDIT tab and select Import from the menu.

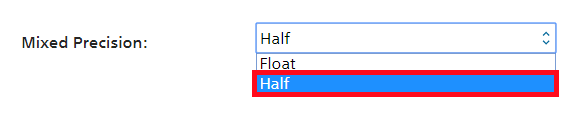

3. Mixed-Precision Training

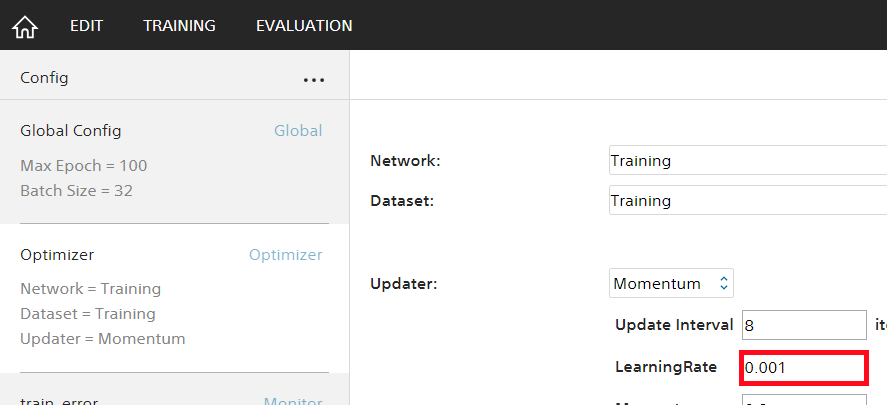

NVIDIA GPUs of the Pascal generation and later support 16-bit floating-point computation (half precision, also called fp16 or half format). On these GPUs, users can enable mixed-precision training simply by changing the Precision setting in the project's Global Config from Float to Half.

Mixed-precision training has the following benefits:

・Because the memory needed for parameters and buffers is roughly halved, larger neural networks can be trained within limited GPU memory.

・When training is limited by GPU memory, the batch size can be doubled, improving computational efficiency (training speed). In addition, GPUs of the Volta generation and later can accelerate training further with Tensor Cores.
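The memory benefits above come down to simple arithmetic: an fp32 value takes 4 bytes and an fp16 value takes 2. The Python sketch below works through that arithmetic for a hypothetical network (25 million parameters, 20 million activation values per sample, 8 GB of memory available for activations); all three numbers are illustrative assumptions. It is an idealized view in which every value is stored in fp16. Many mixed-precision implementations also keep an fp32 master copy of the weights, so real savings are somewhat smaller.

```python
import struct

# Bytes per value for IEEE 754 single (fp32) and half (fp16) precision.
FP32_BYTES = struct.calcsize("<f")
FP16_BYTES = struct.calcsize("<e")


def buffer_megabytes(num_values: int, bytes_per_value: int) -> float:
    """Memory in MB (10**6 bytes) needed to store num_values values."""
    return num_values * bytes_per_value / 1e6


def max_batch_size(budget_bytes: int, values_per_sample: int, bytes_per_value: int) -> int:
    """Largest batch whose per-sample buffers fit in budget_bytes (ignores all other memory)."""
    return budget_bytes // (values_per_sample * bytes_per_value)


# Hypothetical network and memory budget, chosen only for illustration.
PARAMS = 25_000_000
ACTIVATIONS_PER_SAMPLE = 20_000_000
BUDGET = 8_000_000_000

print(f"parameters: {buffer_megabytes(PARAMS, FP32_BYTES):.0f} MB in fp32, "
      f"{buffer_megabytes(PARAMS, FP16_BYTES):.0f} MB in fp16")
print(f"max batch size: {max_batch_size(BUDGET, ACTIVATIONS_PER_SAMPLE, FP32_BYTES)} in fp32, "
      f"{max_batch_size(BUDGET, ACTIVATIONS_PER_SAMPLE, FP16_BYTES)} in fp16")
```

With these numbers the parameters shrink from 100 MB to 50 MB and the largest batch that fits grows from 100 to 200, which is the "halved memory, doubled batch size" effect in its simplest form.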

Although the lower precision of mixed-precision training can degrade model accuracy, it has been shown that loss scaling avoids this problem in most cases.

http://docs.nvidia.com/deeplearning/sdk/mixed-precision-training/index.html

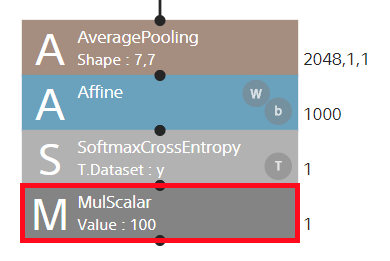

In Neural Network Console, loss scaling is easy to set up: add a MulScalar layer after the loss function to multiply the loss by a scale factor, and multiply the learning rate by 1 / (scale factor) to compensate.
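Why loss scaling helps can be seen with a single number. The Python sketch below emulates fp16 rounding with the standard `struct` module's half-precision `"e"` format: a tiny gradient underflows to zero in fp16, but the same gradient multiplied by the loss scale survives, and dividing the learning rate by the scale restores the intended update. The learning rate, scale, and gradient values are hypothetical, and the sketch assumes plain SGD, where this compensation is exact. It is an illustration of the idea, not how Neural Network Console implements it internally.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (fp16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


LEARNING_RATE = 0.1  # hypothetical SGD learning rate
LOSS_SCALE = 1024.0  # hypothetical loss scale (the value given to MulScalar)
TRUE_GRAD = 1e-8     # tiny gradient, below fp16's smallest subnormal (about 6e-8)

# Without loss scaling, the gradient is rounded to fp16, underflows to zero,
# and the SGD update is lost.
grad_unscaled = to_fp16(TRUE_GRAD)
update_unscaled = LEARNING_RATE * grad_unscaled

# With loss scaling, multiplying the loss by LOSS_SCALE multiplies the gradient
# by LOSS_SCALE too, lifting it into fp16's representable range. A learning rate
# of LEARNING_RATE / LOSS_SCALE then brings the update back to its intended size.
grad_scaled = to_fp16(TRUE_GRAD * LOSS_SCALE)
update_scaled = (LEARNING_RATE / LOSS_SCALE) * grad_scaled

print(f"fp16 gradient without scaling: {grad_unscaled}")
print(f"update without scaling: {update_unscaled:.3e}")
print(f"update with scaling:    {update_scaled:.3e}")
print(f"reference update:       {LEARNING_RATE * TRUE_GRAD:.3e}")
```

The scaled update differs from the reference only by fp16 rounding of the scaled gradient (well under 1% here), while the unscaled update is exactly zero.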

※Mixed-precision training is most effective when the target network is large enough to use up the available GPU memory. For the small networks in most sample projects, or when loading the training data rather than computation is the bottleneck, the speedup may not be noticeable.

4. Other functionalities and improvements

We have also implemented the following functionalities and improvements.

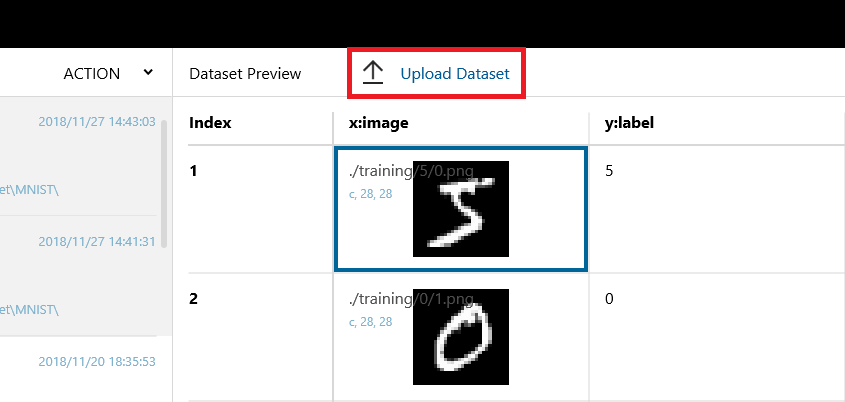

・Upload dataset to Neural Network Console Cloud.

Users can now upload a dataset directly to Neural Network Console Cloud from the dataset preview on the dataset management screen of Neural Network Console Windows. This makes it easy to take advantage of the abundant GPU resources available in Neural Network Console Cloud.

・Addition of new layers, an optimizer, and learning rate schedulers

New features from Neural Network Libraries, including 24 layers, an optimizer (AMSGRAD), and 3 learning rate schedulers, have been added.
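To give a feel for what the newly added AMSGRAD optimizer does, the Python sketch below implements the AMSGrad update rule from the paper that introduced it (Reddi et al., 2018) on a one-dimensional toy problem. It omits bias correction, and the hyperparameters and the function `amsgrad_minimize` are illustrative assumptions. This is not the Neural Network Libraries solver, whose details (for example, bias correction and defaults) may differ.

```python
import math


def amsgrad_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    """Minimize a 1-D function with the AMSGrad update (no bias correction).

    m: exponential moving average of gradients (as in Adam)
    v: exponential moving average of squared gradients (as in Adam)
    v_hat: running maximum of v; using it instead of v is what distinguishes AMSGrad
    """
    x, m, v, v_hat = x0, 0.0, 0.0, 0.0
    for _ in range(steps):
        g = grad_fn(x)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        v_hat = max(v_hat, v)  # never decreases, so lr / sqrt(v_hat) never increases
        x -= lr * m / (math.sqrt(v_hat) + eps)
    return x


# Toy problem: minimize f(x) = x**2, whose gradient is 2x, starting from x = 1.
x_final = amsgrad_minimize(lambda x: 2.0 * x, x0=1.0)
print(f"x after 200 AMSGrad steps: {x_final:.6f}")
```

Taking the running maximum of `v` is the only change from Adam: it keeps the effective per-parameter learning rate from growing again after large gradients fade, which is the property AMSGrad was designed to guarantee.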

・New sample projects

New sample projects, including simple semantic segmentation, Fashion-MNIST, CIFAR-10, and CIFAR-100, have been added. With these, users can download the dataset and run training and inference with just a few simple operations.

samples\sample_project\tutorial\semantic_segmentation

samples\sample_project\image_recognition

We will continue to add new sample projects.

We will keep improving Neural Network Console, and we look forward to your feedback so that we can make the right improvements quickly!

Neural Network Console Windows

https://dl.sony.com/app/