We have updated Neural Network Console Windows.

In this post, we will describe the following major updates.


・Training with Neural Network Console Cloud’s computational resources

・Export to TensorFlow format (.pb) (beta)

・Addition of LIME and Inference plugins

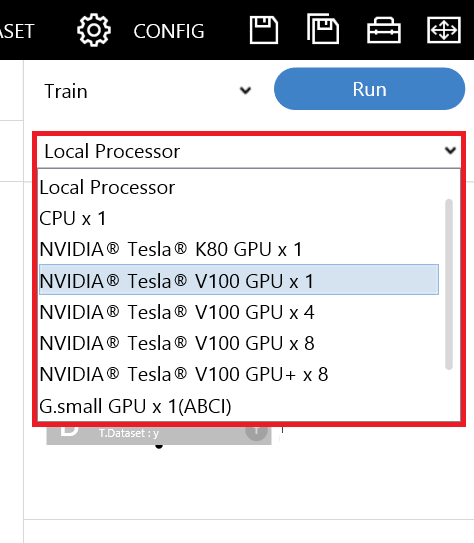

1. Training with Neural Network Console Cloud’s computational resources

Before executing training, we can now select Neural Network Console Cloud’s computational resources from the menu (※1).

When we execute training with the cloud version’s computational resources, the project and the dataset are automatically uploaded to Neural Network Console Cloud.

Once training begins, we can check its progress in the same way as when training with local processors, and also on Neural Network Console Cloud itself.

When training with the cloud version’s resources, it is also possible to run multiple trainings in parallel.

For example, running 4 trainings in parallel with 8 GPUs each lets us use 32 GPUs simultaneously.

For details about training with Neural Network Console Cloud’s resources, please refer to the following section of the PDF manual included with the Windows version:

6.1.9 Executing neural network training on the Neural Network Console Cloud version (beta)

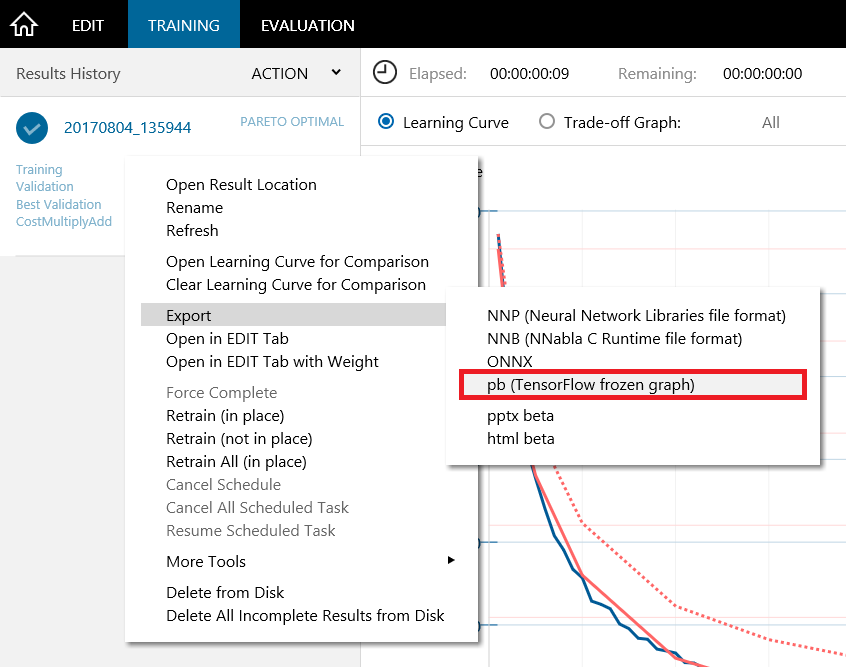

2. Export to TensorFlow format (.pb) (beta)

We can now export models trained with Neural Network Console to TensorFlow format by way of ONNX, which was already supported (※2).

To export to TensorFlow format, right-click on the list of training results and select Export –> pb (TensorFlow frozen graph) from the menu.

With this export functionality, models trained with Neural Network Console can now be executed in a wider range of environments.
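Once exported, a frozen graph (.pb) can be loaded with TensorFlow itself, outside Neural Network Console. The following is a minimal sketch using TensorFlow's `tf.compat.v1` graph APIs; it is not part of Neural Network Console. The helper `write_toy_frozen_graph` and the tensor names `"x:0"` and `"y:0"` are hypothetical stand-ins, because the actual input and output names depend on the exported model (they can be inspected with `graph.get_operations()`).

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def load_frozen_graph(pb_path):
    """Read a frozen GraphDef (.pb) from disk and import it into a new tf.Graph."""
    graph_def = tf.compat.v1.GraphDef()
    with open(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    graph = tf.Graph()
    with graph.as_default():
        # name="" keeps the original node names (no "import/" prefix).
        tf.compat.v1.import_graph_def(graph_def, name="")
    return graph


def run_frozen_graph(graph, input_name, output_name, x):
    """Feed x into the tensor `input_name` and fetch the tensor `output_name`."""
    with tf.compat.v1.Session(graph=graph) as sess:
        return sess.run(output_name, feed_dict={input_name: x})


def write_toy_frozen_graph():
    """Hypothetical stand-in for an exported model: y = x @ w with constant weights."""
    graph = tf.Graph()
    with graph.as_default():
        x = tf.compat.v1.placeholder(tf.float32, shape=[None, 3], name="x")
        w = tf.constant([[1.0], [2.0], [3.0]], dtype=tf.float32)
        tf.identity(tf.matmul(x, w), name="y")
    # Weights stored as constants make this graph already "frozen".
    path = os.path.join(tempfile.mkdtemp(), "toy_model.pb")
    with open(path, "wb") as f:
        f.write(graph.as_graph_def().SerializeToString())
    return path
```

With a real export, pass the path of the .pb file produced by Export –> pb (TensorFlow frozen graph) to `load_frozen_graph`, then call `run_frozen_graph` with that model's own tensor names and an input array of the expected shape.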

3. Addition of LIME and Inference plugins

We introduce two of our newest plugins below.

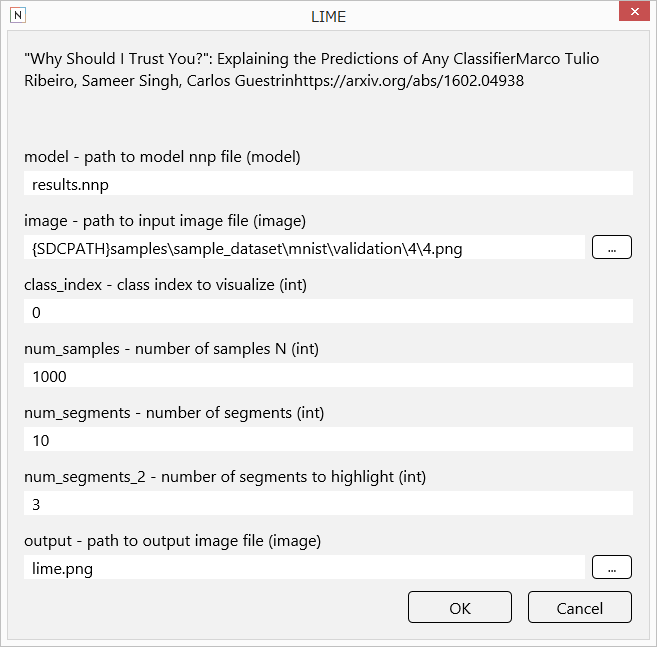

・LIME

LIME (※3) is a method for visualizing which parts of the input data influence the recognition result, similar to our already available Grad-CAM plugin.

LIME can be used in the following way.


1. Execute training and evaluation on a visual recognition project.

2. Select the evaluation image displayed on the EVALUATION tab.

3. Right-click the evaluation result on the EVALUATION tab and select Plugin –> LIME.

4. Enter the index of the class to visualize in class_index (for example, 0 to 999 for 1000-class classification).

To enlarge the LIME result, double-click the resulting image displayed on the EVALUATION tab.
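The idea behind LIME can be illustrated in a few lines. The sketch below is a simplified, NumPy-only version of the procedure described in the LIME paper (※3), not the plugin's implementation. It randomly switches input segments (superpixels, in the image case) on and off, queries the model, weights each perturbed sample by its closeness to the original input, and fits a weighted linear model whose coefficients indicate each segment's influence on the class selected by class_index. The function name `lime_explain`, the toy classifier that takes masks directly instead of masked images, and the kernel width of 0.25 are assumptions for illustration.

```python
import numpy as np


def lime_explain(predict_fn, n_segments, class_index, n_samples=1000,
                 kernel_width=0.25, seed=0):
    """Return one importance weight per segment for the class `class_index`.

    predict_fn (hypothetical): maps an (n, n_segments) array of 0/1 masks to
    (n, n_classes) class probabilities of the model being explained.
    """
    rng = np.random.default_rng(seed)
    # 1. Perturb: randomly switch segments on (1) or off (0).
    masks = rng.integers(0, 2, size=(n_samples, n_segments))
    masks[0] = 1  # include the unperturbed input itself
    # 2. Query the black-box model and keep only the class being explained.
    probs = predict_fn(masks)[:, class_index]
    # 3. Weight samples by proximity to the original (all-ones) input,
    #    using cosine distance and an exponential kernel.
    ones = np.ones(n_segments)
    norms = np.maximum(np.linalg.norm(masks, axis=1), 1e-12)
    distances = 1.0 - (masks @ ones) / (norms * np.linalg.norm(ones))
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)
    # 4. Fit a weighted linear surrogate (least squares with an intercept column).
    X = np.hstack([masks, np.ones((n_samples, 1))])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], probs * sw, rcond=None)
    return coef[:-1]  # drop the intercept; one weight per segment


def toy_predict(masks):
    """Hypothetical 2-class model whose class-1 score depends on segments 1 and 3."""
    p = 0.1 + 0.5 * masks[:, 1] + 0.3 * masks[:, 3]
    return np.stack([1.0 - p, p], axis=1)
```

Calling `lime_explain(toy_predict, n_segments=6, class_index=1)` assigns the largest weights to segments 1 and 3, the parts of the input that actually drive the toy model's class-1 score; in the plugin, these weights correspond to the highlighted regions of the image.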

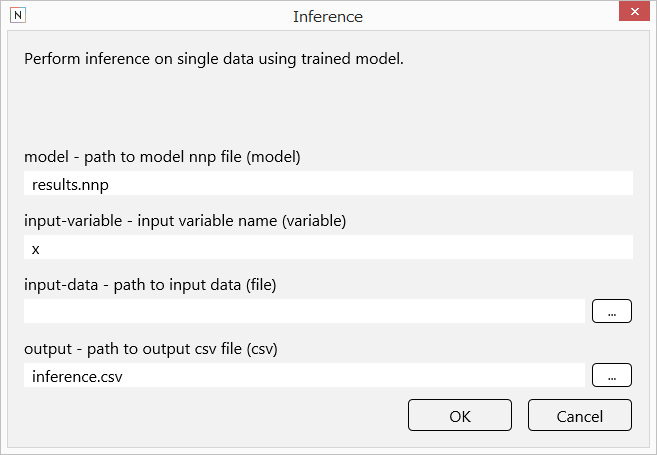

・Inference

This plugin executes inference on a single data sample, making it easy to run inference on new data from the GUI.

Inference can be run as follows:


1. From the list of training results on the left of the EVALUATION tab, select the result of the model to be used for inference.

2. Right-click the evaluation results on the EVALUATION tab and select Plugin –> Inference.

3. Click the button next to input-data and select the file (image or .csv) to run recognition on. For vector data, you can instead enter the values directly, separated by commas.
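Conceptually, inference on single data is a forward pass with a batch of size one. The sketch below shows how comma-separated vector input maps to a single-sample prediction; it assumes a hypothetical linear softmax classifier with made-up weights, and the functions `parse_vector` and `infer_single` are illustrative names, not part of Neural Network Console or its plugin.

```python
import numpy as np


def parse_vector(text):
    """Turn comma-separated input such as "0.5, 1.2, -3" into a 1-D float array."""
    return np.array([float(v) for v in text.split(",") if v.strip()], dtype=np.float32)


def infer_single(x, weights, bias):
    """Run one sample through a hypothetical linear softmax classifier.

    Returns the predicted class index and the class probabilities.
    """
    logits = x[None, :] @ weights + bias          # add a batch axis of size 1
    logits -= logits.max(axis=1, keepdims=True)   # subtract max for numerical stability
    exp = np.exp(logits)
    probs = exp / exp.sum(axis=1, keepdims=True)
    return int(probs.argmax(axis=1)[0]), probs[0]


# Usage with made-up weights: 2 input values, 2 classes.
label, probs = infer_single(parse_vector("0.2, 3.0"),
                            np.eye(2, dtype=np.float32),
                            np.zeros(2, dtype=np.float32))
```

Here the second input value dominates, so the sketch predicts class 1; a real model would replace the made-up weights with the trained network.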

We will continue to improve Neural Network Console.

We also look forward to receiving requests from users for new features!

Neural Network Console Windows

https://dl.sony.com/ja/app/

※1

As of the release date, projects using the RandomFlip layer cannot be executed properly on Neural Network Console Cloud. This issue will be fixed in an update to the cloud version.

※2

In Neural Network Console Windows version 1.60, export to TensorFlow format (.pb) may not complete properly in some cases. We plan to fix this issue in the near future.

※3

“Why Should I Trust You?”: Explaining the Predictions of Any Classifier

Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin

https://arxiv.org/abs/1602.04938