We have released an update to Neural Network Console Windows today. This post introduces the major changes.

・Direct support for wav files

・Refinements for more convenient trial-and-error

・New plugins, layers, and solvers

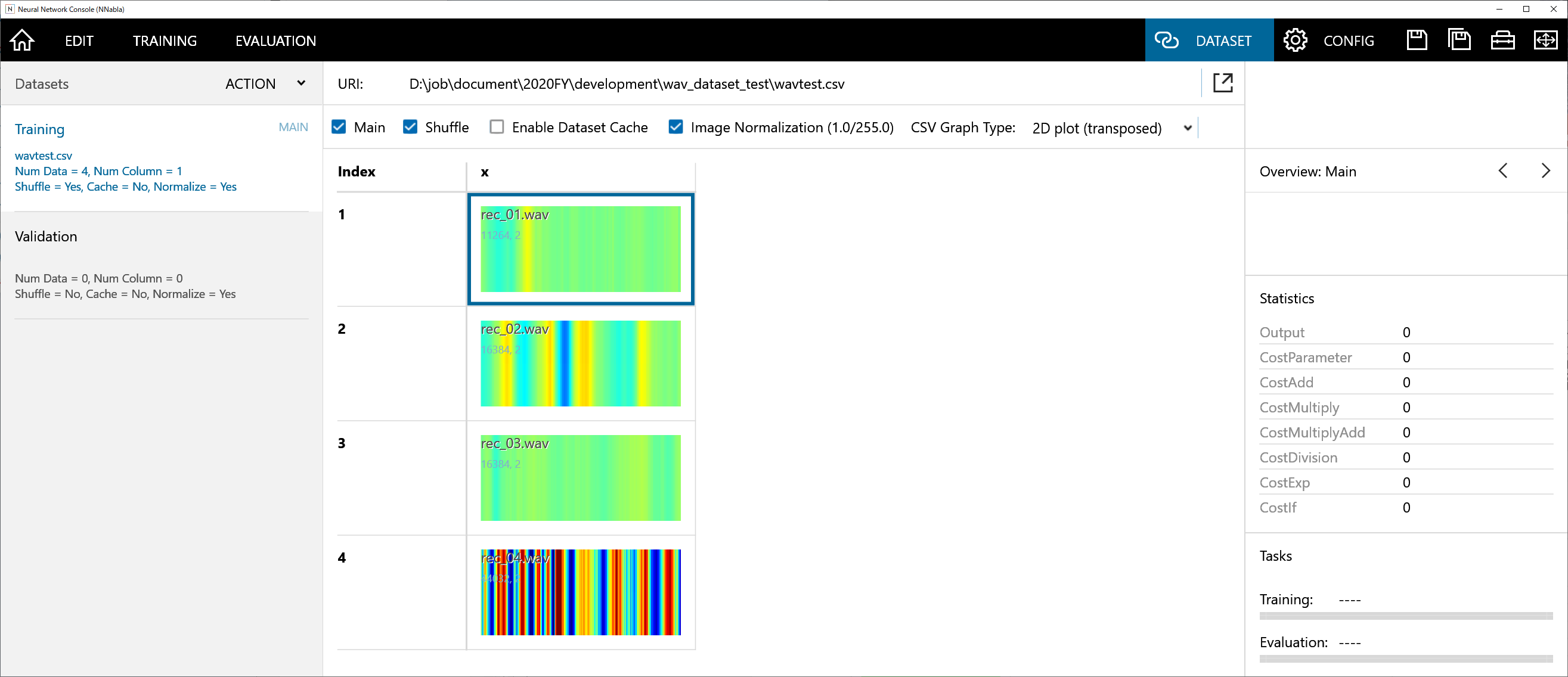

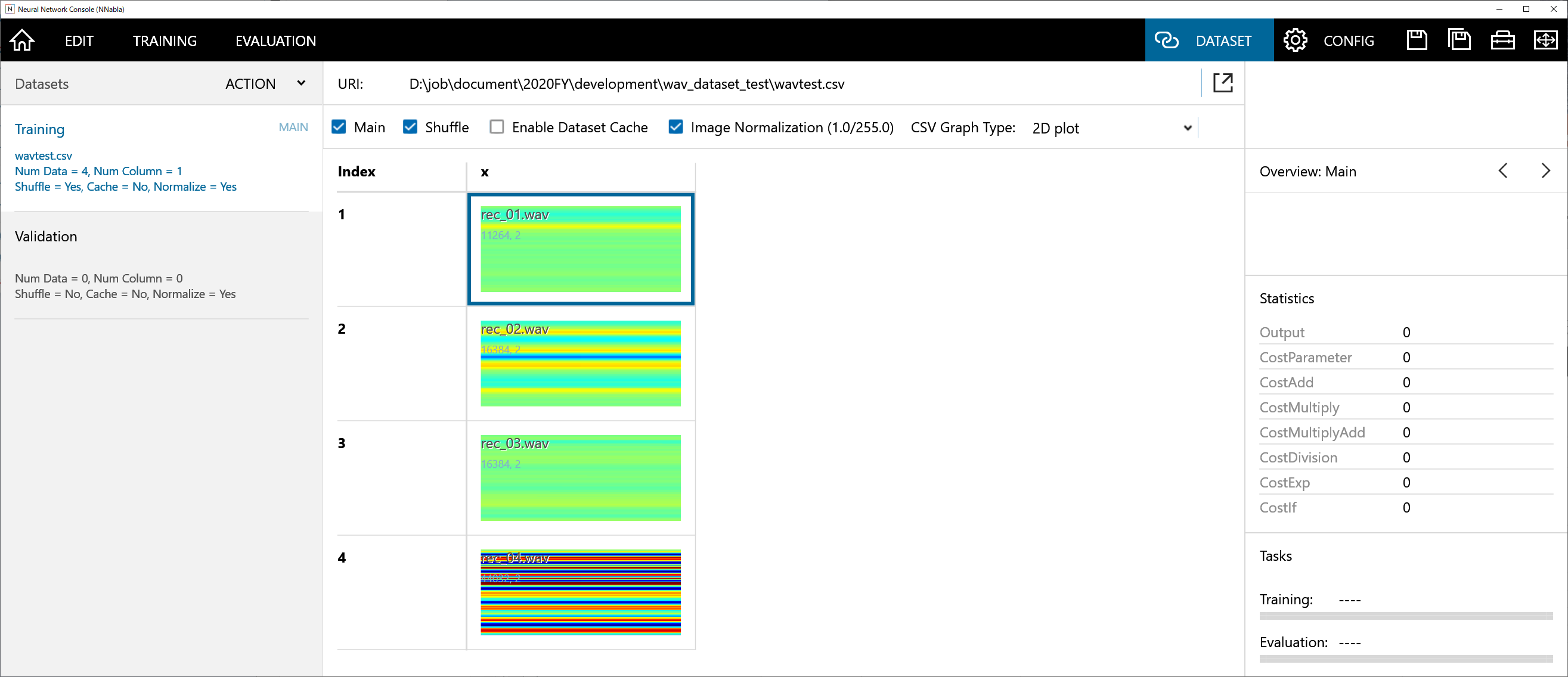

1. Direct support for wav files

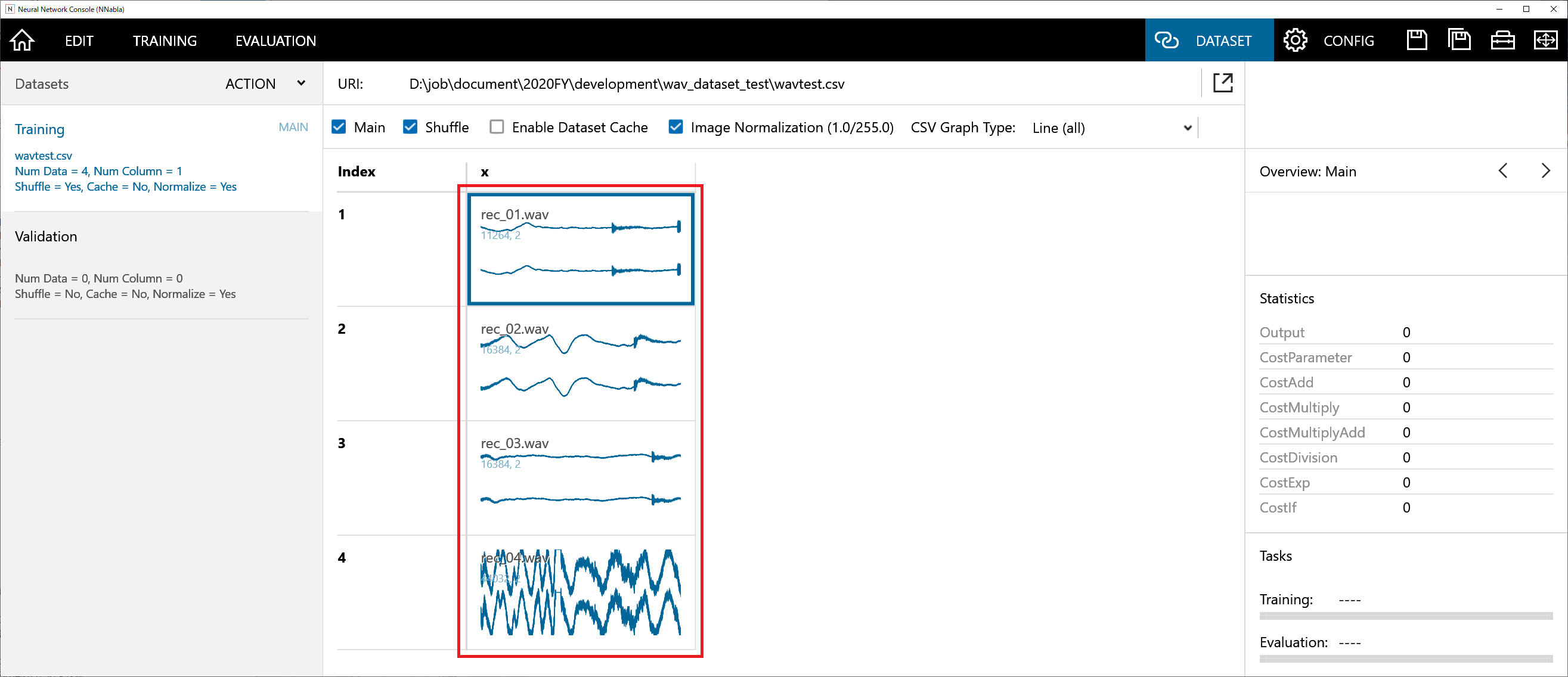

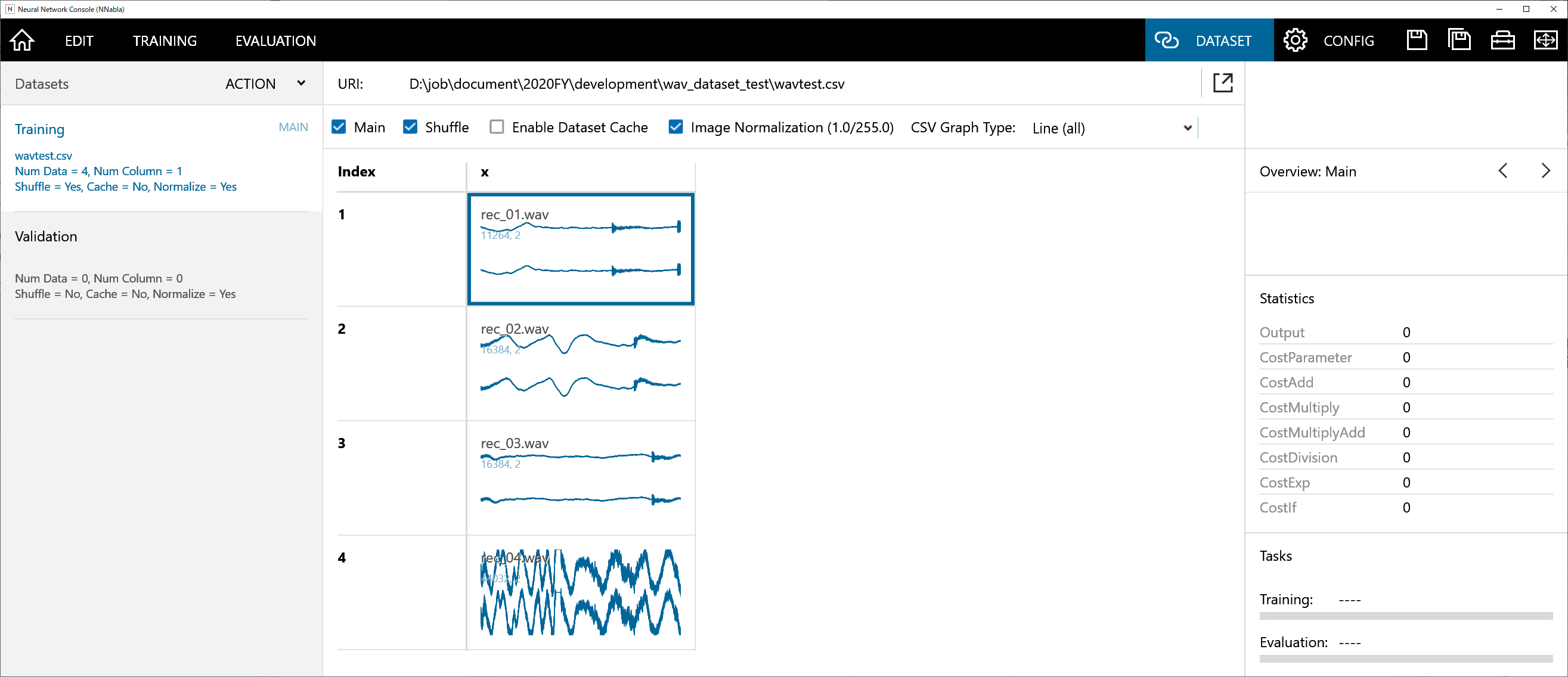

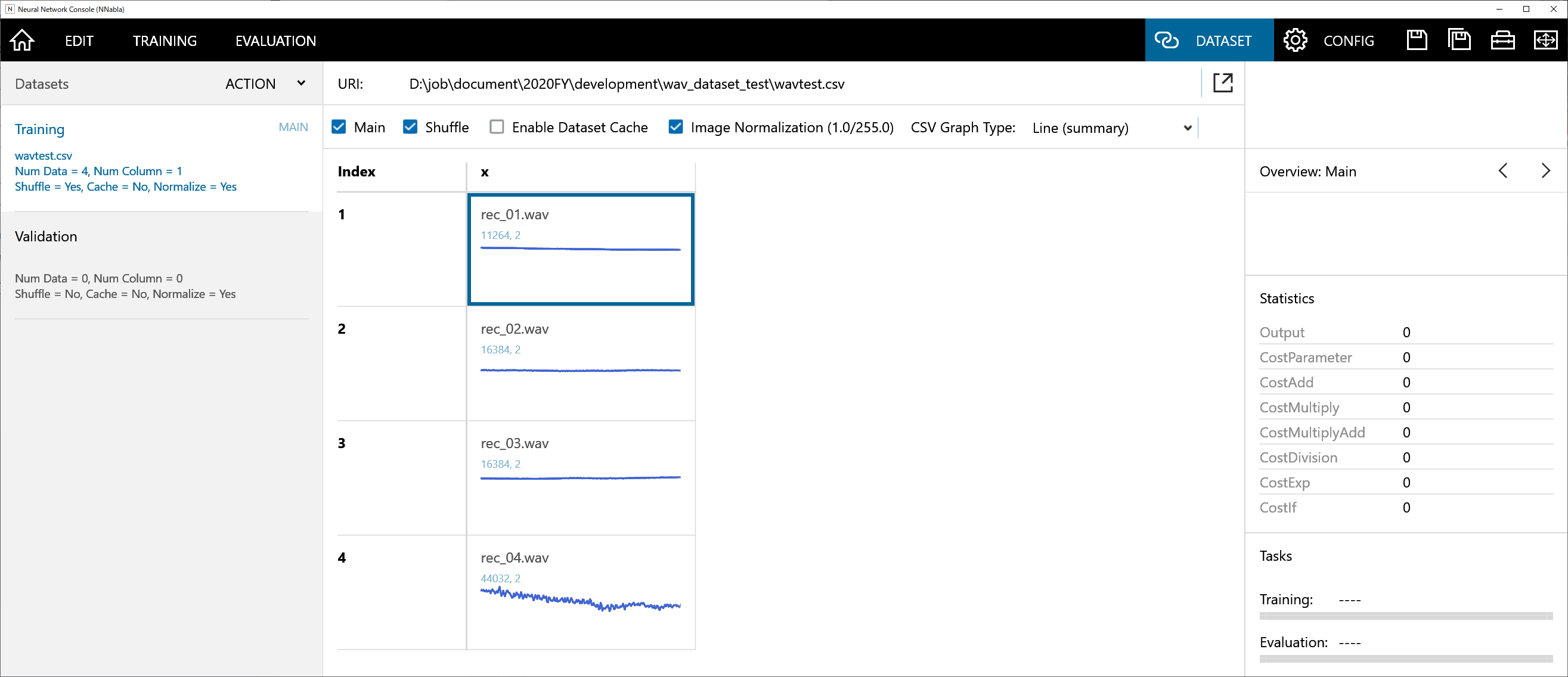

Previously, wav files had to be converted to CSV files before they could be used. Now they are handled the same way as image files: simply specify each wav file in a cell of your dataset CSV file.
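A minimal sketch of building such a dataset CSV with Python's standard `csv` module follows. The function name `write_wav_dataset_csv`, the header `x:wav,y:label`, and the file paths are hypothetical; the header imitates the `x:image,y:label` style of Neural Network Console's sample dataset CSVs, so check it against your own datasets before relying on it.

```python
import csv


def write_wav_dataset_csv(csv_path, samples):
    """Write a dataset CSV with one wav-file cell and one label cell per row.

    samples: iterable of (wav_file_path, label) pairs.
    """
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        # Header in a "variable:name" style (column names are assumptions)
        writer.writerow(["x:wav", "y:label"])
        for wav_path, label in samples:
            # The wav path goes in the cell exactly as an image path would
            writer.writerow([wav_path, label])


if __name__ == "__main__":
    write_wav_dataset_csv("train.csv", [("./wav/0001.wav", 0), ("./wav/0002.wav", 1)])
    with open("train.csv", encoding="utf-8") as f:
        print(f.read())
```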

Neural Network Console loads each wav file specified in the CSV as a matrix of size (number of samples) × (number of channels). Loaded wav files are displayed as waveforms in the DATASET tab.

Currently, 8-bit and 16-bit PCM wav files are supported. This update makes it easier to run experiments on waveform data such as speech.
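The samples × channels layout described above can be approximated with Python's standard `wave` module. This is a minimal sketch, not Neural Network Console's own loader: the hypothetical `load_wav_matrix` returns one row per sample and one column per channel, and it shifts unsigned 8-bit samples so they are centred on zero. That shift is an assumption; the post does not say whether the Console offsets or rescales sample values.

```python
import struct
import wave


def load_wav_matrix(path):
    """Return a PCM wav file as rows of samples, one column per channel."""
    with wave.open(path, "rb") as wf:
        if wf.getcomptype() != "NONE":
            raise ValueError("only uncompressed PCM wav files are supported")
        n_channels = wf.getnchannels()
        sample_width = wf.getsampwidth()  # bytes per sample: 1 (8-bit) or 2 (16-bit)
        raw = wf.readframes(wf.getnframes())

    if sample_width == 1:
        # 8-bit PCM wav is unsigned; centre it on 0 (an assumed convention)
        values = [b - 128 for b in raw]
    elif sample_width == 2:
        # 16-bit PCM wav is signed little-endian
        values = list(struct.unpack("<%dh" % (len(raw) // 2), raw))
    else:
        raise ValueError("only 8-bit or 16-bit PCM is supported")

    # Frames are interleaved (ch0, ch1, ..., ch0, ch1, ...): split into rows
    return [values[i:i + n_channels] for i in range(0, len(values), n_channels)]


if __name__ == "__main__":
    # Write a tiny 3-sample, 2-channel, 16-bit file and load it back
    frames = [(0, 100), (-200, 300), (32767, -32768)]
    with wave.open("example.wav", "wb") as wf:
        wf.setnchannels(2)
        wf.setsampwidth(2)
        wf.setframerate(16000)
        wf.writeframes(b"".join(struct.pack("<hh", l, r) for l, r in frames))
    print(load_wav_matrix("example.wav"))  # [[0, 100], [-200, 300], [32767, -32768]]
```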

2. Refinements for more convenient trial-and-error

Based on user feedback, we have made a number of functional refinements.

Searching and Replacing Layers

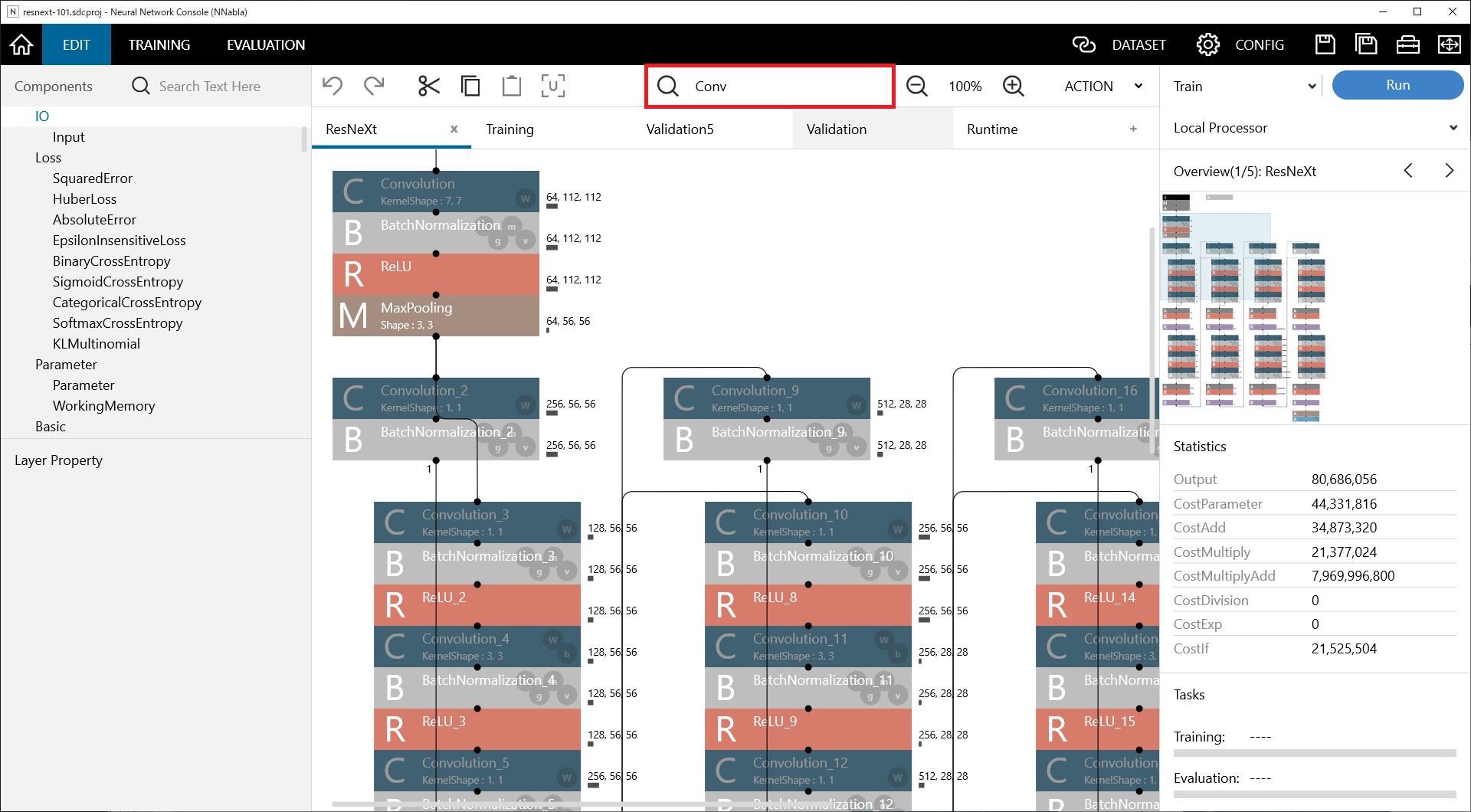

You can search for layers by typing a layer's type or name into the search box at the top of the EDIT tab.

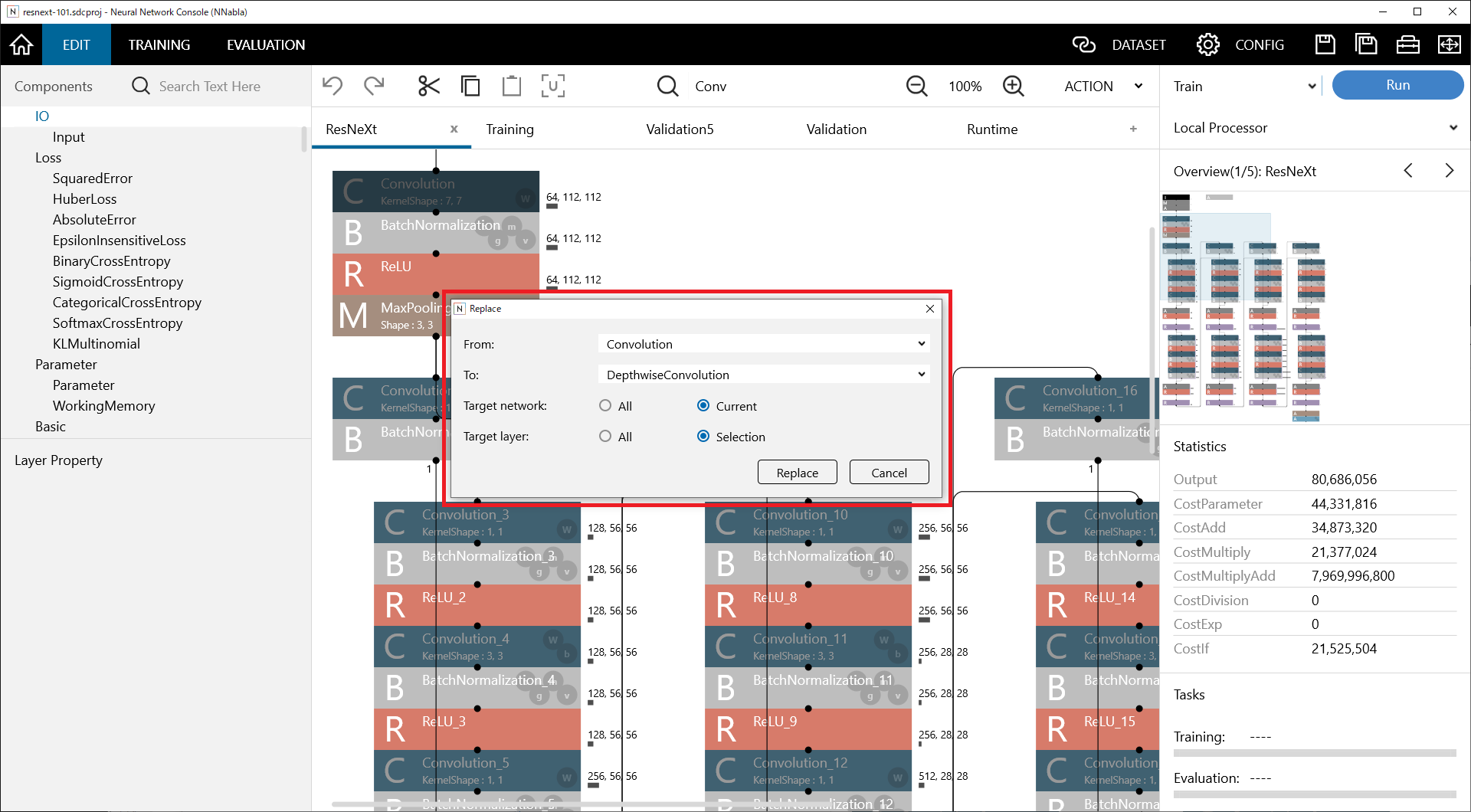

In addition, Replace in the right-click menu replaces layers of a given type with layers of another type. The replacement can be applied to one of three ranges: the entire network, the network currently displayed, or a user-specified range.

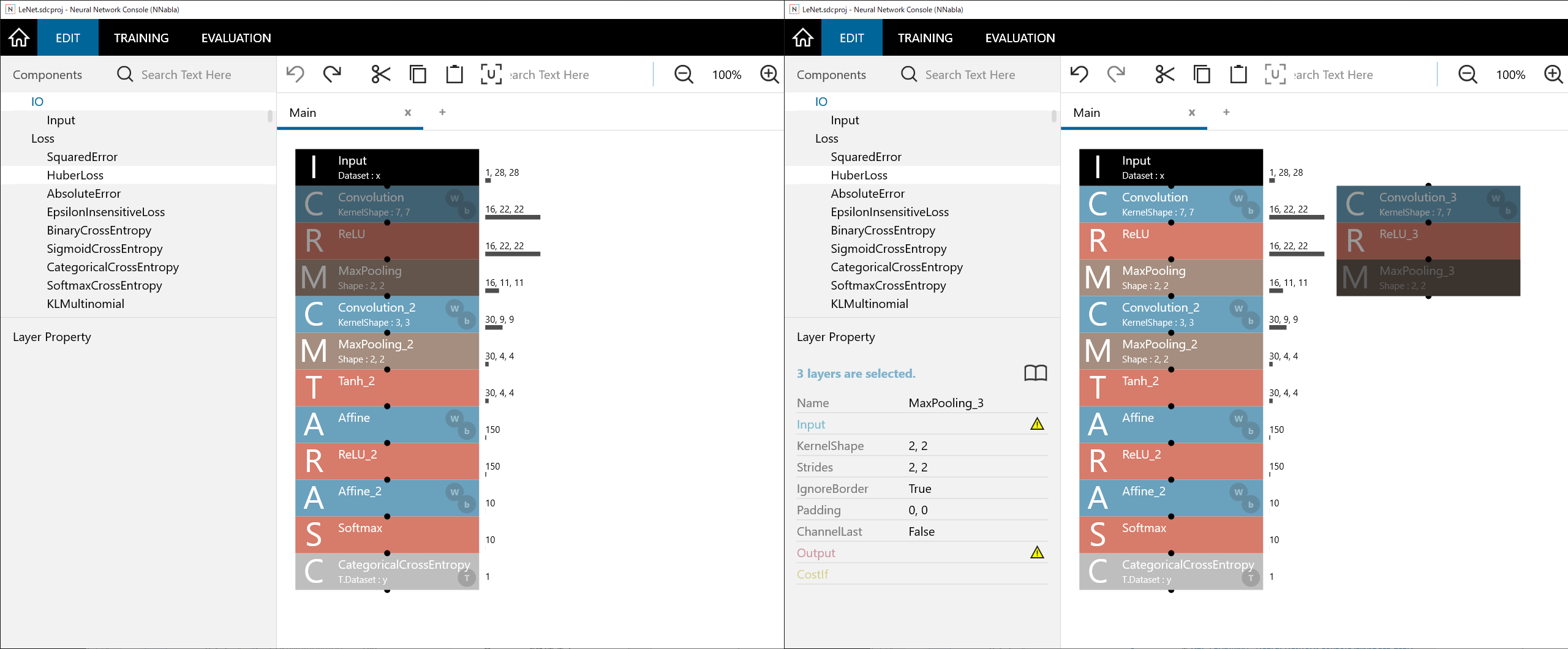

Duplicating Layers

The currently selected layers can be duplicated with the Ctrl+D shortcut or by Alt+dragging them.

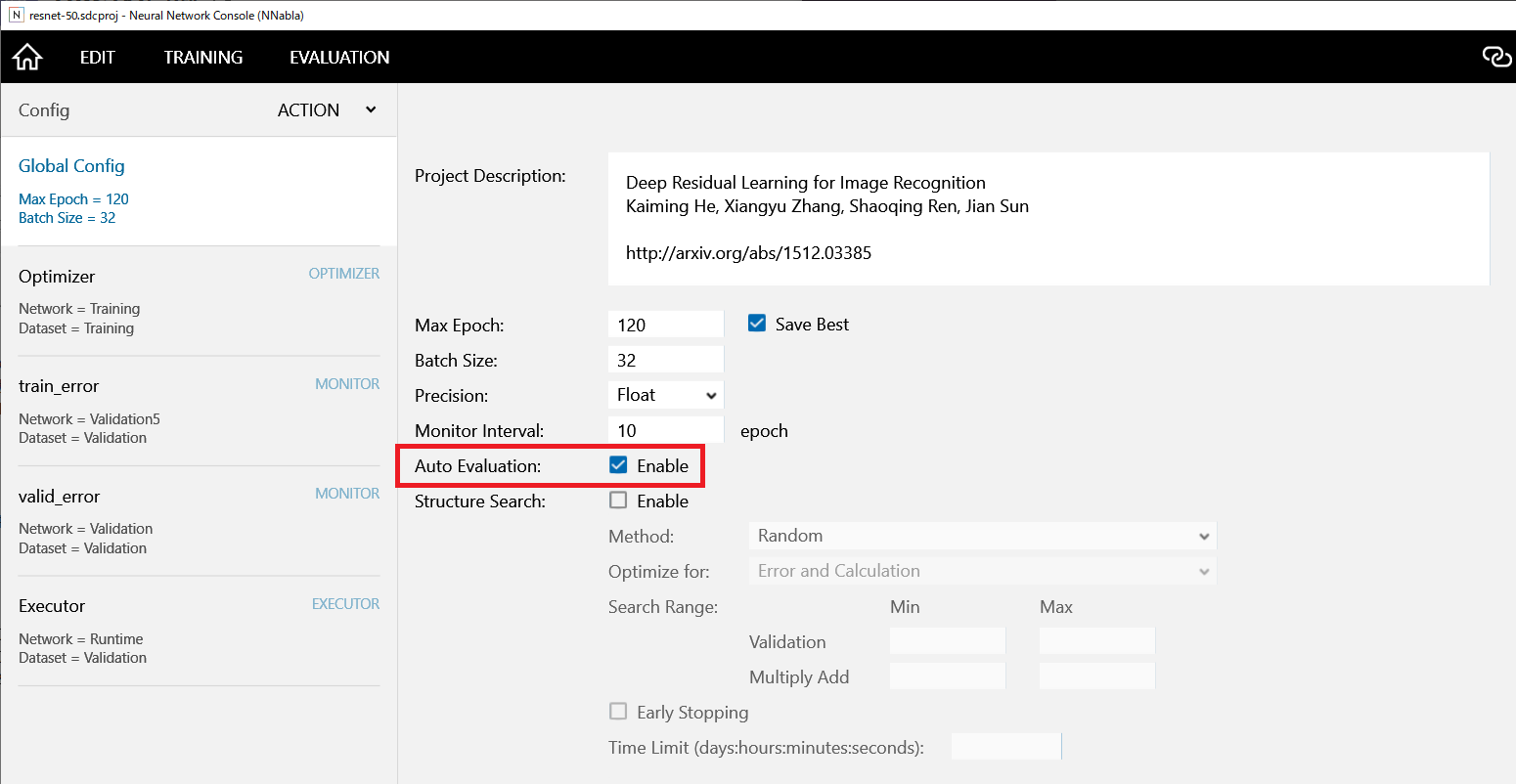

Automatic Evaluation

By selecting Global Config in the CONFIG tab and checking Auto Evaluation, evaluation runs automatically as soon as training finishes. When you want to evaluate every trained model, you no longer need to evaluate them one by one.

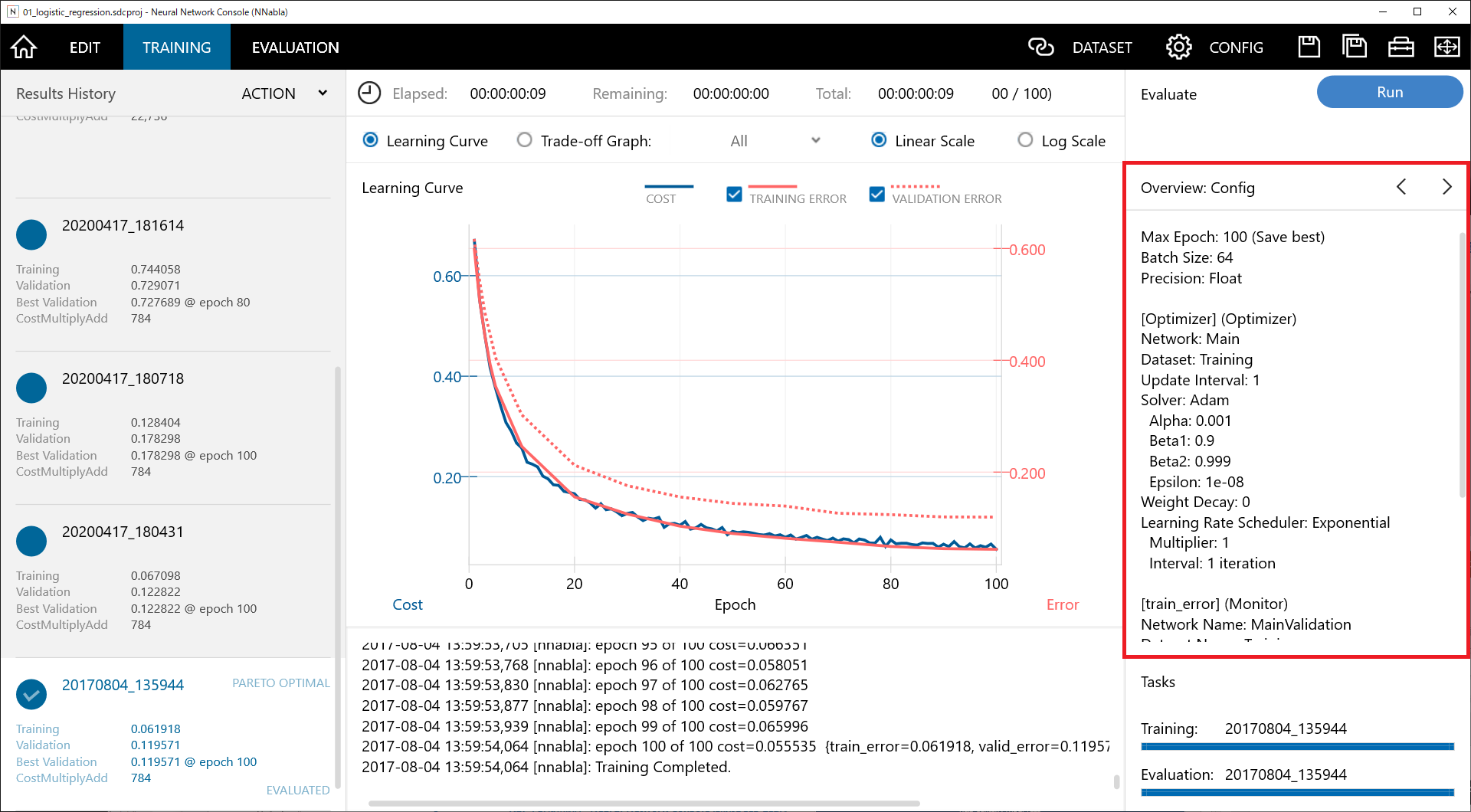

Checking Training Configuration

Configuration items such as Batch Size and Updater can now be checked in Overview for past training results, just as the network structure can. To view the CONFIG of a training result, click the “<” button at the upper right of Overview to switch its contents to CONFIG.

3. New plugins, layers, and solvers

Additional Display Modes for CSV and Wav Files

In addition to the per-column waveform display (limited to the first 128 rows and 10 columns), new display types are available: a simplified display of all waveforms, a heat map display, and a transposed heat map display.

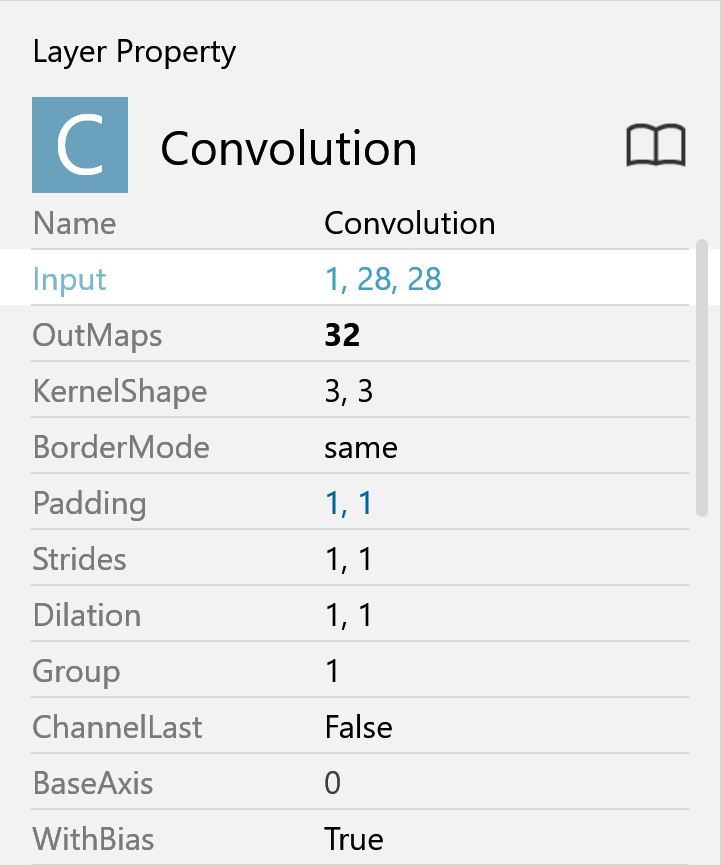

Visibility of Properties

Properties changed from their default values are now shown in bold, and properties containing formulas and the like are shown in blue.

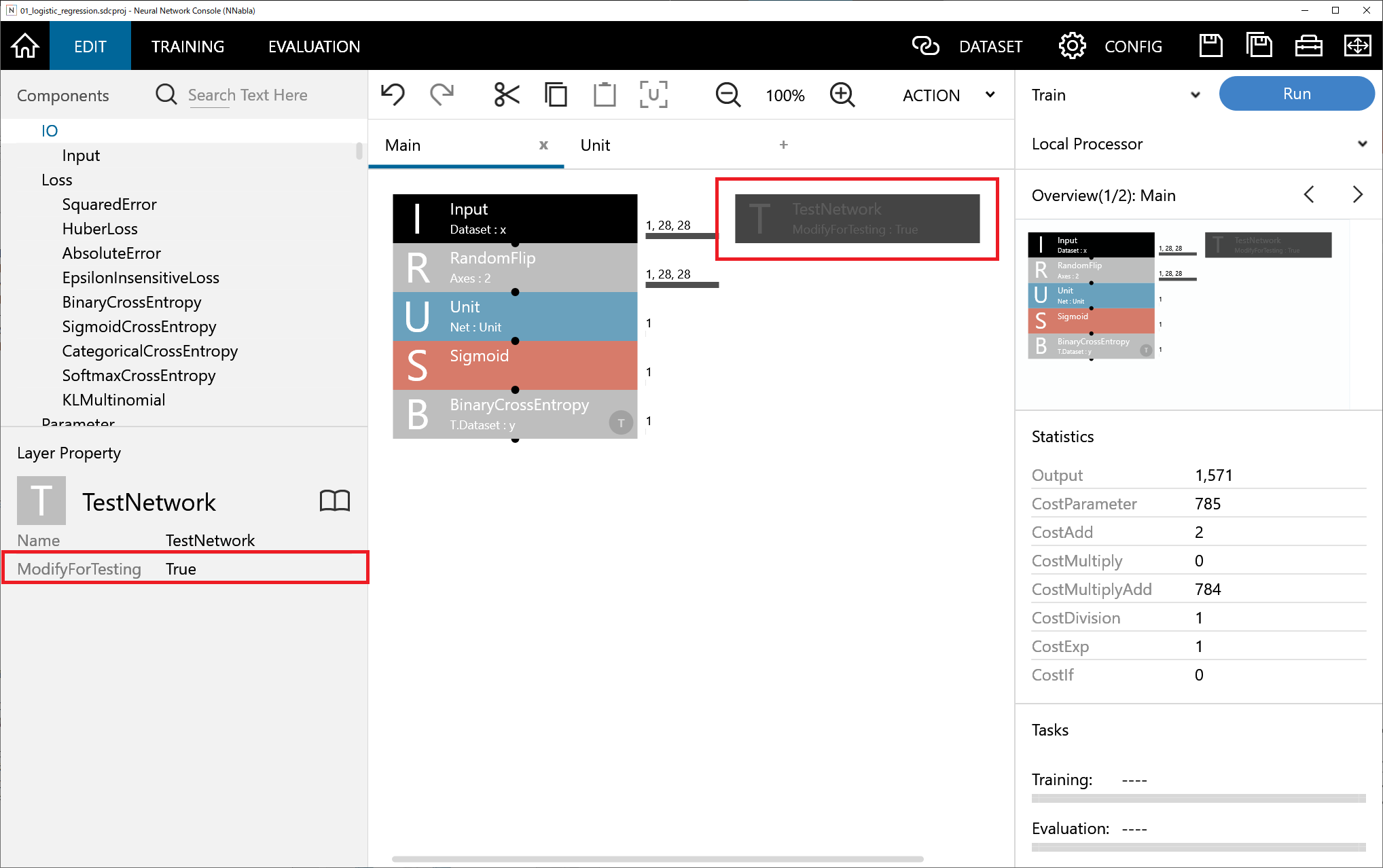

Easier Configuration of Inference Network

Simply add the new TestNetwork layer from the Setting category to your network to change settings for the test network, such as skipping layers whose SkipAtTest property is True or setting the BatchStat property of BatchNormalization layers to False. The changed settings take effect when training is run.

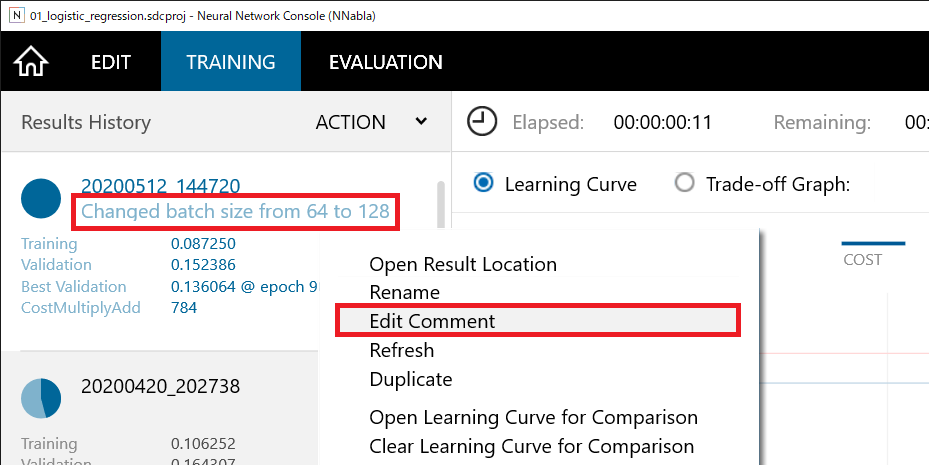
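The two test-network changes mentioned above can be illustrated with a short sketch. It is not how Neural Network Console implements the TestNetwork layer: the network is a hypothetical list of dicts forming a simple sequential chain, the helper `make_test_network` is invented for illustration, and it applies both changes unconditionally rather than letting you choose.

```python
import copy


def make_test_network(layers):
    """Derive a test (inference) network from a training network.

    Hypothetical representation: each layer is a dict with "type", "name" and
    "props", and the list is a simple sequential chain.
    """
    test_layers = []
    for layer in layers:
        props = layer.get("props", {})
        # Drop layers flagged SkipAtTest; in a sequential chain the previous
        # layer's output then feeds the next layer directly.
        if props.get("SkipAtTest", False):
            continue
        new_layer = copy.deepcopy(layer)  # leave the training network untouched
        if new_layer["type"] == "BatchNormalization":
            # Use stored running statistics instead of per-batch statistics
            new_layer.setdefault("props", {})["BatchStat"] = False
        test_layers.append(new_layer)
    return test_layers


if __name__ == "__main__":
    train_net = [
        {"type": "Input", "name": "Input", "props": {}},
        {"type": "Affine", "name": "Affine1", "props": {}},
        {"type": "BatchNormalization", "name": "BN1", "props": {"BatchStat": True}},
        {"type": "Dropout", "name": "Dropout1", "props": {"SkipAtTest": True}},
        {"type": "Affine", "name": "Affine2", "props": {}},
    ]
    test_net = make_test_network(train_net)
    print([layer["name"] for layer in test_net])  # ['Input', 'Affine1', 'BN1', 'Affine2']
    print(test_net[2]["props"]["BatchStat"])  # False
```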

Comments on Training Results

You can now add a comment to a training result by choosing Comment from its right-click menu. This is useful, for example, for noting what was tried in each experiment.

Various Layers and Solvers

New layers include AddN and MulN, which add or multiply three or more inputs; BatchInv, which computes the inverse of each matrix in a batch; and IsInf, IsNaN, ResetInf, and ResetNaN, which detect and reset Inf and NaN values. New solvers include SgdW and AdamW [1], variants of Momentum Sgd and Adam that decouple weight decay from the gradient-based update, and Lars [2], designed for large-batch training such as large-scale distributed training.
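To make the decoupled weight decay of [1] and the layer-wise learning rate of [2] concrete, the following pure-Python sketch implements single update steps following the algorithm listings in those papers (SGDW and AdamW from [1], LARS from [2]) on plain lists of floats. It is not Neural Network Console's solver code: the function names, argument names, and default values are assumptions, and the fallback to a local learning rate of 1.0 when a norm is zero in `lars_step` is a convention some implementations use, assumed here rather than taken from [2].

```python
import math


def sgdw_step(theta, grad, mom, lr=0.01, momentum=0.9, wd=1e-4, eta=1.0):
    """One SGDW step (Algorithm 1 in [1]); updates theta and mom in place.

    eta is the schedule multiplier; wd is the decoupled weight decay factor.
    """
    for i, g in enumerate(grad):
        # The gradient carries no L2 term; decay is applied to theta separately
        mom[i] = momentum * mom[i] + eta * lr * g
        theta[i] = theta[i] - mom[i] - eta * wd * theta[i]


def adamw_step(theta, grad, m, v, t, alpha=0.001, beta1=0.9, beta2=0.999,
               eps=1e-8, wd=1e-2, eta=1.0):
    """One AdamW step (Algorithm 2 in [1]); t is the 1-based step count."""
    for i, g in enumerate(grad):
        m[i] = beta1 * m[i] + (1 - beta1) * g
        v[i] = beta2 * v[i] + (1 - beta2) * g * g
        m_hat = m[i] / (1 - beta1 ** t)  # bias-corrected first moment
        v_hat = v[i] / (1 - beta2 ** t)  # bias-corrected second moment
        # Decay acts on theta directly instead of being rescaled by sqrt(v_hat)
        theta[i] -= eta * (alpha * m_hat / (math.sqrt(v_hat) + eps) + wd * theta[i])


def lars_step(w, grad, vel, lr=0.1, momentum=0.9, wd=5e-4, trust=0.001):
    """One LARS step for the parameters of a single layer (Algorithm 1 in [2])."""
    w_norm = math.sqrt(sum(x * x for x in w))
    g_norm = math.sqrt(sum(g * g for g in grad))
    if w_norm > 0 and g_norm > 0:
        # Layer-wise local learning rate from the ratio of weight and gradient norms
        local_lr = trust * w_norm / (g_norm + wd * w_norm)
    else:
        local_lr = 1.0  # assumed fallback when either norm is zero
    for i, g in enumerate(grad):
        vel[i] = momentum * vel[i] + lr * local_lr * (g + wd * w[i])
        w[i] -= vel[i]


if __name__ == "__main__":
    # With a zero gradient, AdamW shrinks theta by exactly eta * wd * theta
    theta = [1.0]
    adamw_step(theta, [0.0], [0.0], [0.0], t=1, wd=0.01)
    print(round(theta[0], 6))  # 0.99
```

The zero-gradient example shows the point of decoupling: the decay term shrinks the weight by a fixed fraction regardless of the adaptive scaling that Adam applies to the gradient.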

New Plugins

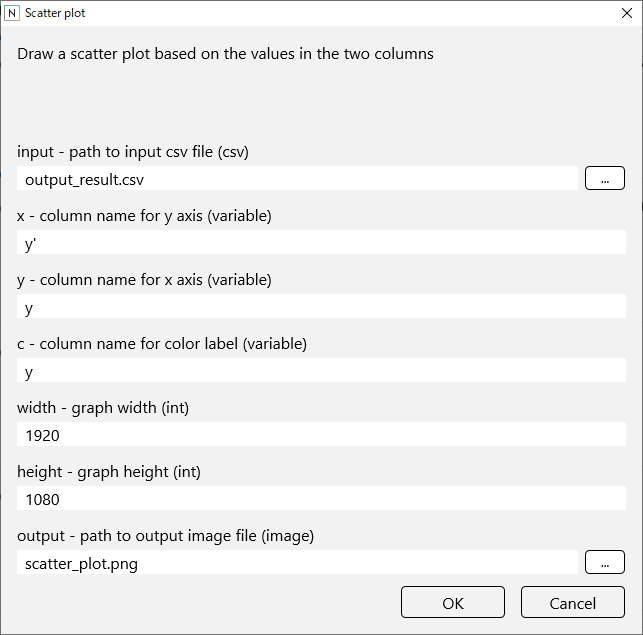

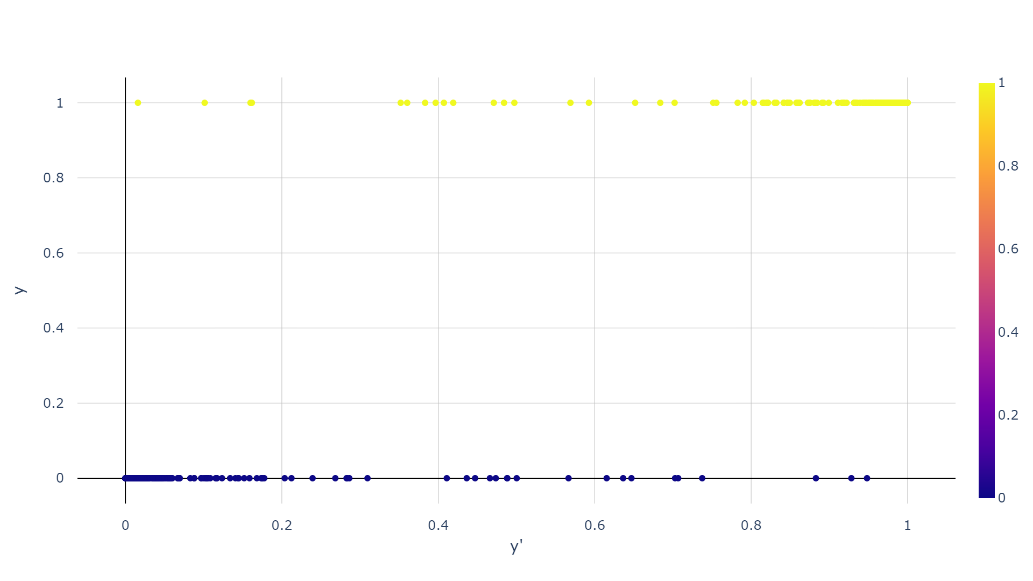

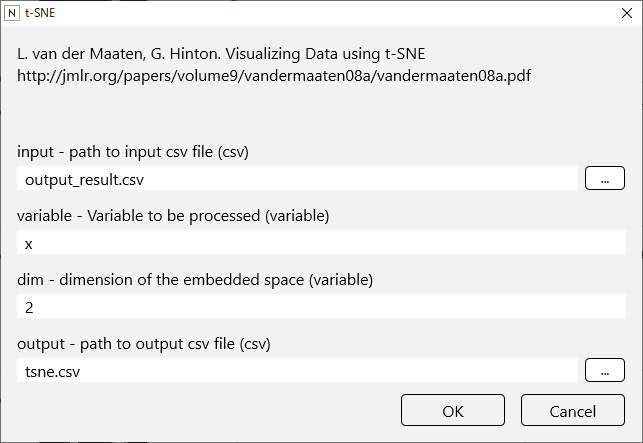

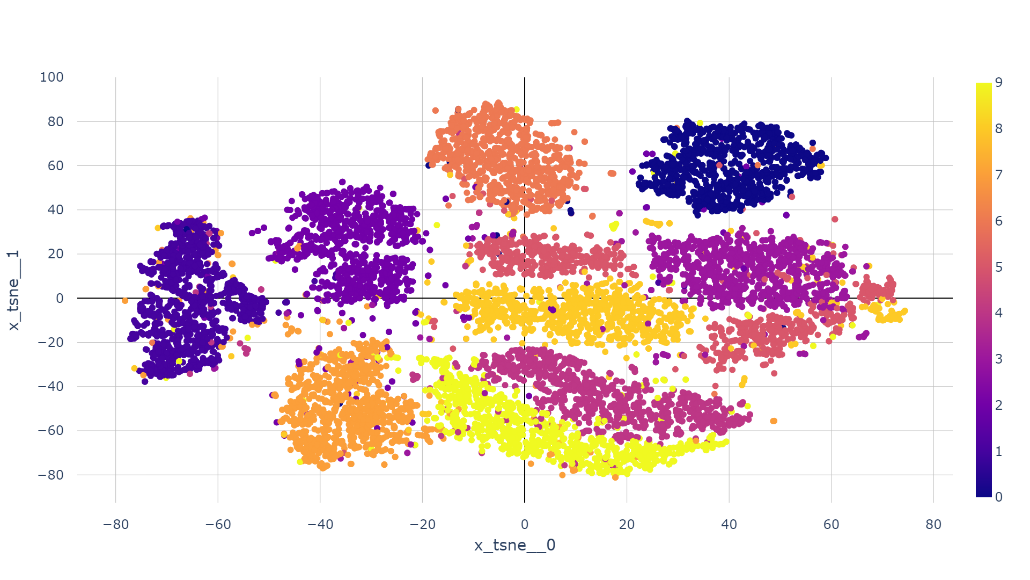

We have added a Scatter Plot plugin, which draws a scatter plot of two variables in a dataset CSV file, and a t-SNE [3] plugin, which reduces high-dimensional data to a low-dimensional representation.

We will continue to update Neural Network Console.

We look forward to your feedback as we continue to improve it!

Neural Network Console Windows

https://dl.sony.com/ja/app/

[1] Ilya Loshchilov, Frank Hutter. "Decoupled Weight Decay Regularization." https://arxiv.org/abs/1711.05101

[2] Yang You, Igor Gitman, Boris Ginsburg. "Large Batch Training of Convolutional Networks." https://arxiv.org/abs/1708.03888

[3] Laurens van der Maaten, Geoffrey Hinton. "Visualizing Data using t-SNE." http://www.jmlr.org/papers/volume9/vandermaaten08a/vandermaaten08a.pdf