Starting with version 1.20 of the Windows version of Neural Network Console, you can use the unit function to concisely express complex neural networks, such as those with nested structures.

This tutorial describes how to use the unit function, taking the basic convolutional neural network LeNet as an example.

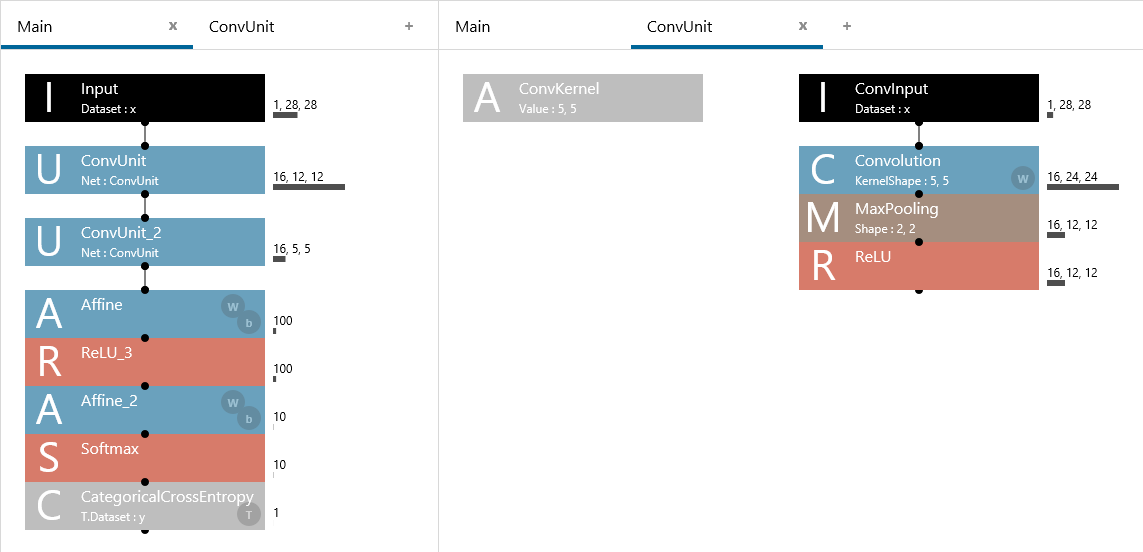

LeNet

1. What you can do with the unit function

In the LeNet shown above, the Convolution→MaxPooling→ReLU structure appears twice in a row, immediately after the Input layer.

Without the unit function, you have to lay out Convolution→MaxPooling→ReLU twice, as shown above. This is tedious, even with copy and paste. Moreover, if you later edit the network structure and want to change ReLU to ELU, for example, you have to make the same replacement in two places, which is equally tedious.

By using the unit function to define Convolution→MaxPooling→ReLU as a single unit, you can reuse that structure, as shown below.

LeNet expression using the unit function

Each ConvUnit in the main network runs the process defined in the ConvUnit network.

With the unit function, the Convolution→MaxPooling→ReLU structure is defined only inside ConvUnit. Therefore, to change ReLU to ELU as described earlier, you only need to replace ReLU with ELU once, inside ConvUnit.

The unit function is analogous to subroutines and functions in programming. In programming, frequently used code is defined as a function so that it can be reused. Likewise, the unit function lets you define frequently used network structures as units so that they can be reused.
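To make the analogy concrete, here is a minimal sketch in plain Python. It assumes a hypothetical representation in which a network is just a list of layer names (this is not how Neural Network Console stores networks). The Convolution→MaxPooling→ReLU block is written once in `conv_unit` and referenced twice, so switching its activation is a one-line edit.

```python
# Hypothetical representation for illustration only: a network is a list of
# layer names. Neural Network Console does not store networks this way.

UNIT_ACTIVATION = "ReLU"  # edit this one line (e.g. to "ELU") to change both units


def conv_unit():
    """The reusable block, playing the role of the ConvUnit network."""
    return ["Convolution", "MaxPooling", UNIT_ACTIVATION]


def lenet():
    """The main network 'calls' the unit twice, like a function."""
    return (
        ["Input"]
        + conv_unit()
        + conv_unit()
        + ["Affine", "ReLU", "Affine", "Softmax", "CategoricalCrossEntropy"]
    )


print(lenet())
```

The ReLU after the first Affine layer is written out separately, so it is not affected when the unit's activation changes.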

The benefits of units become especially evident when you design a large, complex network: the entire network takes less work to design, is easier to read, and is easier to maintain.

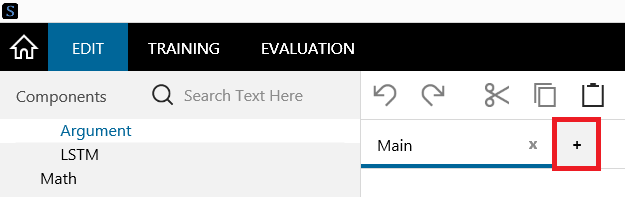

2. How to define units

Defining a unit is very simple. Click the + button at the top of the network graph on the EDIT tab to add a new network structure, then build the content of the unit in that structure.

Adding a new network structure

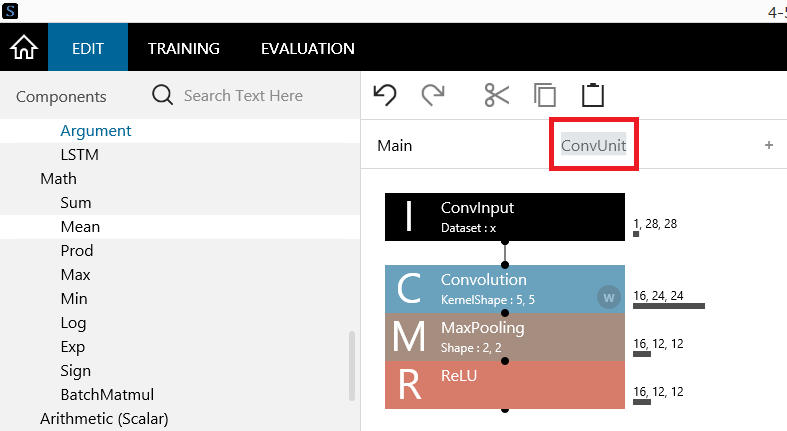

For example, to define the Convolution→MaxPooling→ReLU structure as a unit, connect the Convolution, MaxPooling, and ReLU layers in sequence after the Input layer. When you have finished defining the unit, click the name area of the network structure and change it to a unit name of your choice (“ConvUnit” in this example).

Defining a unit

3. Adding parameters to a unit

Just as you can give parameters to functions in programming, you can define parameters for a unit whose values are specified by the caller of the unit. This section describes how to define parameters, using the example of adding a parameter that specifies the KernelShape of the Convolution layer in the ConvUnit defined earlier.

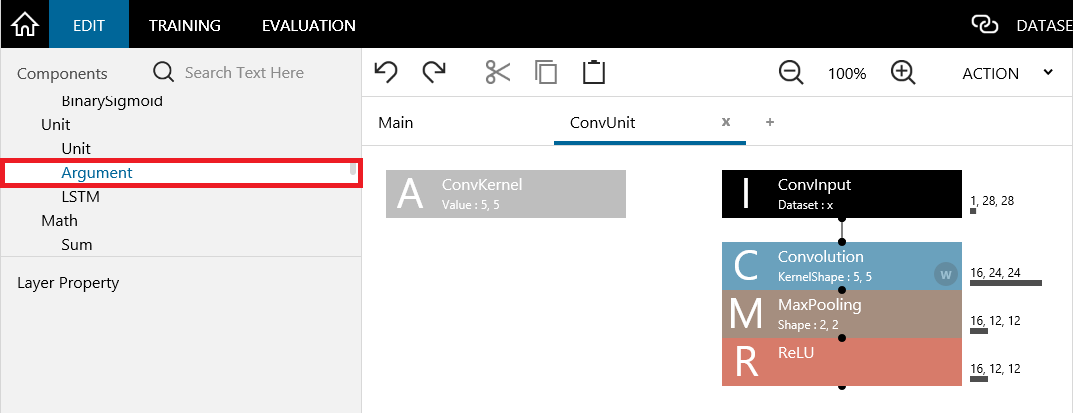

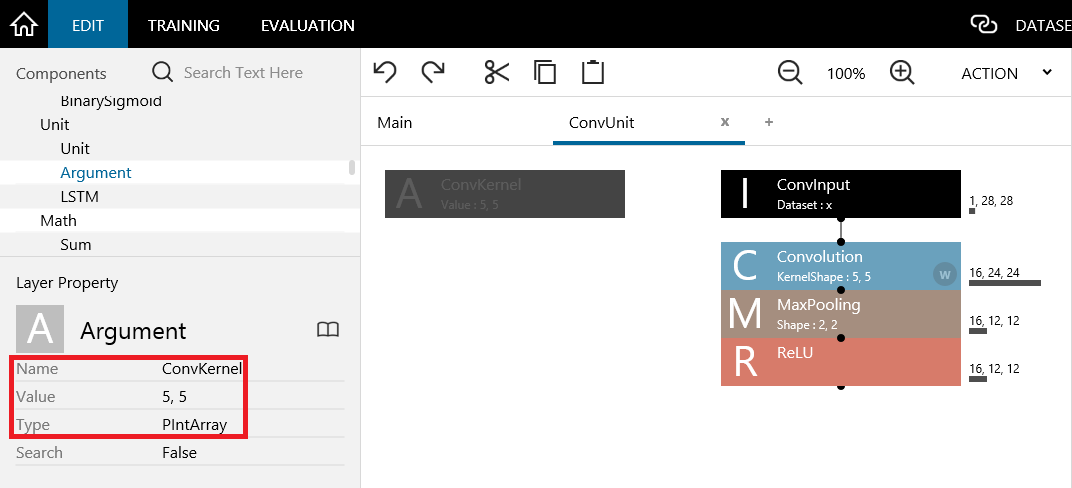

First, to add a parameter to the unit, add an Argument layer (found in the Unit category) to the unit.

Adding an Argument layer

After adding the Argument layer, edit its properties to set the name, type, and default value of the parameter. In this example, select the added Argument layer, set the Name property to a parameter name of your choice (“ConvKernel” in this example), set the Type property to PIntArray (array of positive integers), and set the Value property, which holds the default value, to “5,5”.
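The three properties of the Argument layer can be pictured as a small record. The sketch below is a hypothetical model, not Neural Network Console's implementation: the `Argument` class and `parse_pint_array` helper are illustrative names, and the parsing rule (comma-separated positive integers) is an assumption based on the “5,5” example.

```python
from dataclasses import dataclass

# Hypothetical model of an Argument layer. The PIntArray parsing rule below is
# an assumption made for illustration, not the tool's actual behavior.


def parse_pint_array(text):
    """Parse a string such as "5,5" into a tuple of positive integers."""
    values = tuple(int(part) for part in text.split(","))
    if any(v <= 0 for v in values):
        raise ValueError(f"PIntArray expects positive integers, got {text!r}")
    return values


@dataclass
class Argument:
    name: str   # Name property, e.g. "ConvKernel"
    type: str   # Type property, e.g. "PIntArray"
    value: str  # Value property: the default, as typed in the editor

    def default(self):
        if self.type == "PIntArray":
            return parse_pint_array(self.value)
        raise NotImplementedError(f"type {self.type} is not covered by this sketch")


conv_kernel = Argument(name="ConvKernel", type="PIntArray", value="5,5")
print(conv_kernel.default())  # (5, 5)
```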

Editing the Argument layer

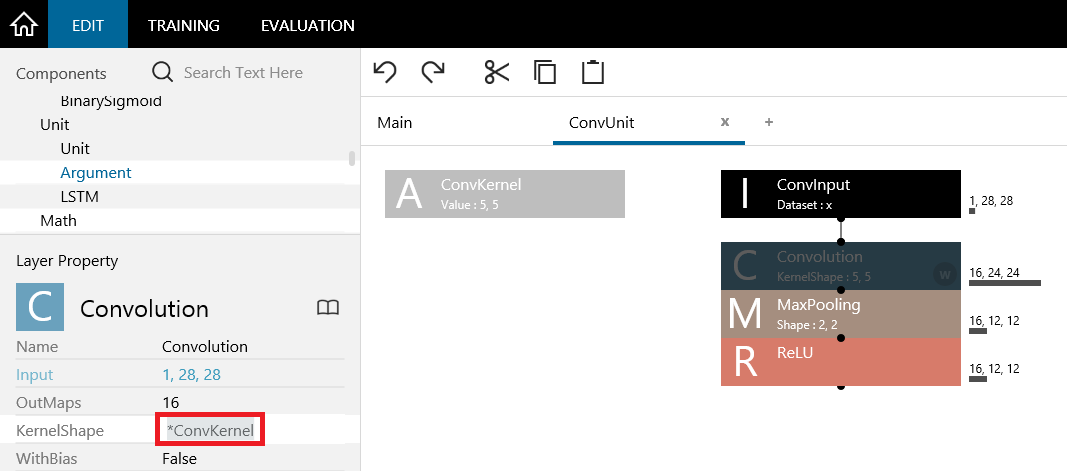

Finally, reference the defined parameter from the layer that will use it. You can reference a parameter from any editable property of any layer in the unit by entering an asterisk followed by the parameter name. In this example, select the Convolution layer and set its KernelShape property to “*ConvKernel”. The Convolution layer then uses the value of the ConvKernel parameter as its KernelShape.
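The asterisk reference behaves like a variable lookup. Here is a minimal sketch of that substitution, assuming layer properties are modeled as a plain dict; `resolve_properties` is a hypothetical helper that only mirrors the behavior described above.

```python
# Hypothetical sketch: resolve "*Name" property values against the unit's
# parameters. Layer properties are modeled as a plain dict.


def resolve_properties(properties, parameters):
    """Replace every "*Name" value with the value of parameter Name."""
    resolved = {}
    for key, value in properties.items():
        if isinstance(value, str) and value.startswith("*"):
            resolved[key] = parameters[value[1:]]  # KeyError if the parameter is undefined
        else:
            resolved[key] = value  # ordinary values pass through unchanged
    return resolved


convolution = {"OutMaps": 16, "KernelShape": "*ConvKernel"}
print(resolve_properties(convolution, {"ConvKernel": (5, 5)}))
# {'OutMaps': 16, 'KernelShape': (5, 5)}
```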

Making the layer use the parameter defined in the Argument layer

4. Using the defined unit from other network structures

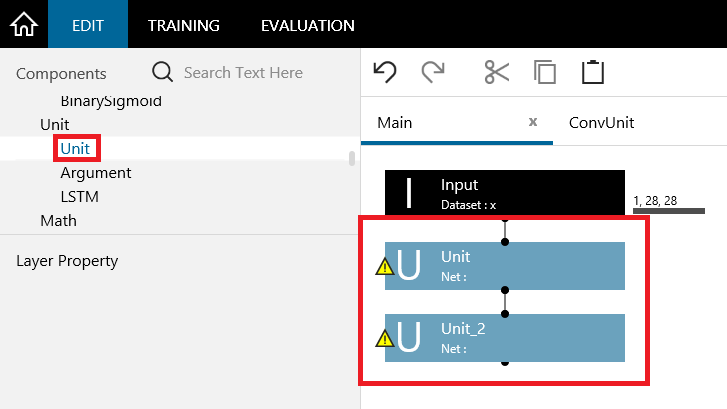

Let’s design a main network that uses the unit we defined. To use a defined unit in a network, use the Unit layer in the Unit category.

Click the main network to start editing it. In LeNet, the ConvUnit we defined is used twice at the beginning of the network, so insert two Unit layers after the Input layer.

Inserting Unit layers in the main network

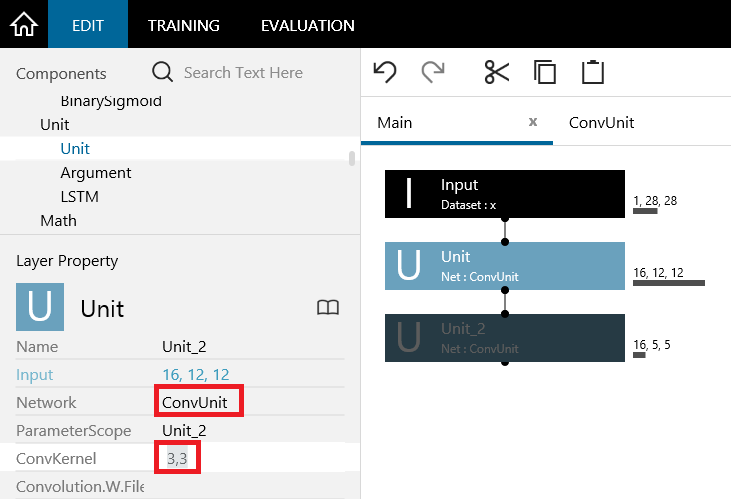

Immediately after the Unit layers are inserted, warnings appear, as shown above, because the Unit layers do not yet specify which unit to use. Each Unit layer functions properly once you select it and set its Network property to the name of a defined unit (the network structure name). Here, set the Network property of both added Unit layers to “ConvUnit”.

Editing the Unit layer property

In addition, the parameters defined in the unit appear in the Layer Property of the Unit layer. Here, the ConvKernel parameter that was defined for ConvUnit with the Argument layer appears as a property of each Unit layer. By changing this property, you specify the value passed to the unit. In this example, the KernelShape of the second convolution should be 3,3, so set the ConvKernel property of the second Unit layer to “3,3”. The first Unit layer keeps the default value of 5,5.
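Putting the pieces together, calling a unit amounts to three steps: look the unit up by its Network property, start from the Argument defaults, and let the caller's values override them. The sketch below is a hypothetical model of those steps; `UNITS` and `call_unit` are illustrative names, not part of Neural Network Console.

```python
# Hypothetical model of a Unit layer calling a unit. The names UNITS and
# call_unit are illustrative; they only mirror the steps described above.

UNITS = {
    "ConvUnit": {
        "arguments": {"ConvKernel": (5, 5)},  # Argument layer defaults
        "layers": [
            ("Convolution", {"OutMaps": 16, "KernelShape": "*ConvKernel"}),
            ("MaxPooling", {"KernelShape": (2, 2)}),
            ("ReLU", {}),
        ],
    }
}


def call_unit(network, **overrides):
    """Expand a Unit layer: find the unit by name, then apply caller values."""
    if network not in UNITS:
        # The editor shows a warning here; this sketch raises instead.
        raise KeyError(f"no unit named {network!r}")
    unit = UNITS[network]
    params = {**unit["arguments"], **overrides}  # caller values win over defaults
    expanded = []
    for layer, props in unit["layers"]:
        props = {
            k: params[v[1:]] if isinstance(v, str) and v.startswith("*") else v
            for k, v in props.items()
        }
        expanded.append((layer, props))
    return expanded


first = call_unit("ConvUnit")                      # keeps the default 5,5
second = call_unit("ConvUnit", ConvKernel=(3, 3))  # overrides it with 3,3
print(first[0])   # ('Convolution', {'OutMaps': 16, 'KernelShape': (5, 5)})
print(second[0])  # ('Convolution', {'OutMaps': 16, 'KernelShape': (3, 3)})
```

Because overrides are merged into a fresh dict on each call, one Unit layer's value never leaks into another.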

Finally, add the Affine, ReLU, Affine, Softmax, and CategoricalCrossEntropy layers after the two Unit layers to complete LeNet.
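Passing a different ConvKernel to each unit changes the shapes flowing through the network. This sketch tracks them, assuming a convolution with 16 output maps, no padding, and stride 1, followed by 2×2 max pooling with stride 2. These settings match the exported code in section 6; the helper names are hypothetical.

```python
# Sketch: track (channels, height, width) through the two ConvUnits of LeNet.
# Assumes 16 output maps, no padding, stride 1, and 2x2 pooling with stride 2.


def conv_shape(shape, out_maps, kernel):
    _, h, w = shape
    kh, kw = kernel
    return (out_maps, h - kh + 1, w - kw + 1)  # "valid" convolution


def pool_shape(shape, size=(2, 2)):
    c, h, w = shape
    return (c, h // size[0], w // size[1])  # leftover border rows/columns are dropped


def conv_unit_shape(shape, conv_kernel=(5, 5)):
    """Shape after Convolution -> MaxPooling -> ReLU (ReLU keeps the shape)."""
    return pool_shape(conv_shape(shape, 16, conv_kernel))


shape = (1, 28, 28)
shape = conv_unit_shape(shape)                      # first unit, default 5,5
print(shape)  # (16, 12, 12)
shape = conv_unit_shape(shape, conv_kernel=(3, 3))  # second unit, 3,3
print(shape)  # (16, 5, 5)
```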

5. Various applications using the unit function

Beyond the example above, the unit function has several other uses.

Defining your own layers

You can create your own layers by combining basic layers, such as those in the Math and Arithmetic categories, and define them as units.

By calling these units through Unit layers, you can use them as though they were built into Neural Network Console.
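As an illustration of composing a new layer from basic element-wise operations, the sketch below builds x · sigmoid(x) from exponent, scalar, and multiplication steps. The Python functions are hypothetical stand-ins loosely named after Math and Arithmetic layers (Exp, MulScalar, AddScalar, RDivScalar, Mul2); the exact layer set available in the tool is an assumption here, and the computed function is just an example.

```python
import math

# Hypothetical element-wise "basic layers" operating on lists of floats.


def exp(xs):
    return [math.exp(x) for x in xs]


def mul_scalar(xs, a):
    return [x * a for x in xs]


def add_scalar(xs, a):
    return [x + a for x in xs]


def r_div_scalar(xs, a):
    return [a / x for x in xs]  # computes a / x for each element


def mul2(xs, ys):
    return [x * y for x, y in zip(xs, ys)]


def my_activation_unit(xs):
    """Custom layer: x * sigmoid(x), where sigmoid(x) = 1 / (1 + exp(-x))."""
    sigmoid = r_div_scalar(add_scalar(exp(mul_scalar(xs, -1.0)), 1.0), 1.0)
    return mul2(xs, sigmoid)


print(my_activation_unit([0.0, 1.0]))
```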

Defining the shared section of networks that differ between training, evaluation, and inference

If you want to use different loss functions or different preprocessing for training, evaluation, and inference, you can express each variant as its own network and define the section they share as a unit.

Because the shared section is defined in one place, it is easy to maintain.
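The same idea in code form: a minimal sketch in plain Python where one function plays the role of the shared unit, and separate training and inference networks call it with different heads. All function names are hypothetical, and the shared section is reduced to a single weight-matrix product purely for illustration.

```python
import math

# Hypothetical sketch: one shared "unit" used by two different networks.


def shared_unit(features, weights):
    """Shared section (stand-in for a feature extractor plus Affine): logits."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def training_network(features, label, weights):
    """Training: shared section + Softmax + categorical cross-entropy loss."""
    probs = softmax(shared_unit(features, weights))
    return -math.log(probs[label])


def inference_network(features, weights):
    """Inference: shared section + Softmax only, with no loss."""
    return softmax(shared_unit(features, weights))
```

Changing `shared_unit` updates both networks at once, which is the maintainability benefit described above.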

6. Exporting the Python code of networks that use units

For networks defined with units, you can also export source code that can be used from Neural Network Libraries: right-click the EDIT tab and click Export, Python Code(NNabla) on the shortcut menu that appears.

The following example shows the export of the LeNet defined earlier.

```python
import nnabla as nn
import nnabla.functions as F
import nnabla.parametric_functions as PF


def network(x, y, test=False):
    # Input:x -> 1,28,28
    # ConvUnit -> 16,12,12
    with nn.parameter_scope('ConvUnit'):
        h = network_ConvUnit(x, (5,5))
    # ConvUnit_2 -> 16,5,5
    with nn.parameter_scope('ConvUnit_2'):
        h = network_ConvUnit(h, (3,3))
    # Affine -> 100
    h = PF.affine(h, (100,), name='Affine')
    # ReLU_3
    h = F.relu(h, True)
    # Affine_2 -> 10
    h = PF.affine(h, (10,), name='Affine_2')
    # Softmax
    h = F.softmax(h)
    # CategoricalCrossEntropy -> 1
    h = F.categorical_cross_entropy(h, y)
    return h


def network_ConvUnit(x, ConvKernel, test=False):
    # ConvInput:x -> 1,28,28
    # Convolution -> 16,24,24
    h = PF.convolution(x, 16, ConvKernel, (0,0), name='Convolution')
    # MaxPooling -> 16,12,12
    h = F.max_pooling(h, (2,2), (2,2))
    # ReLU
    h = F.relu(h, True)
    return h
```


Export example of Python code for Neural Network Libraries of a project using the unit function

This example shows that a network_ConvUnit function corresponding to ConvUnit is exported separately from the network function and is called within it. The network_ConvUnit function also receives ConvKernel as a parameter, whose value is supplied each time network_ConvUnit is called within the network function: (5,5) for the first call and (3,3) for the second. Each call is wrapped in its own nn.parameter_scope ('ConvUnit' and 'ConvUnit_2'), so the two convolution layers get separate weights even though they share one definition.