Neural networks that have been trained on Neural Network Console can be executed using only the open source Neural Network Libraries, without Neural Network Console. This tutorial explains two methods of executing inference on such networks. One method uses the command line interface of Neural Network Libraries. The other method uses the Python API.

1. Method of executing inference using the command line interface

Inference using the command line interface is the easiest way to execute inference. It requires no coding. The disadvantage, however, is that execution is slow, because every execution must start Python, configure the trained network, and load the parameters and the target classification dataset.

The command line interface is suitable for experimental applications and for applications where performance is not important.

1.1 Obtaining a trained neural network

First, prepare a trained neural network using Neural Network Console.

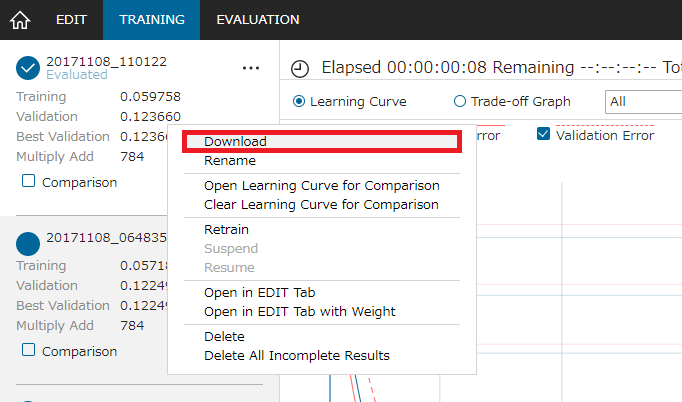

If a neural network has been trained using the Neural Network Console Cloud version, on the training result list on the left of the TRAINING or EVALUATION tab, right-click the training result you want to obtain, and from the shortcut menu that appears, click Download. This will allow you to obtain a result.nnp file containing the neural network structure and trained parameters.

Obtaining a trained neural network from the Cloud version

If a neural network has been trained using the Neural Network Console Windows version, on the training result list on the left of the TRAINING or EVALUATION tab, double-click the training result you want to obtain. Or right-click the training result, and from the shortcut menu that appears, click Open Result Location.

A folder containing the training results will open, and you will see the following two files.

| File name | Description |
| --- | --- |
| net.nntxt | Neural network structure |
| results.nnp | Structure and trained parameters of the neural network |

Files composing a Windows version trained neural network

1.2 Preparing an environment for using Neural Network Libraries

Install Neural Network Libraries on a PC of your choice (the PC does not need to have Neural Network Console installed). For details on how to install Neural Network Libraries, see the following document.

http://nnabla.readthedocs.io/en/latest/python/installation.html

When the installation is complete, execute from Python the following CLI command, which is included in the Neural Network Libraries package.

$ python {Neural Network Libraries Installation Path}/nnabla/utils/cli/cli.py forward

2017-xx-xx xx:xx:xx,xxx [nnabla][INFO]: Initializing CPU extension...

usage: cli.py forward [-h] -c CONFIG [-p PARAM] [-d DATASET] -o OUTDIR

cli.py forward: error: the following arguments are required: -c/--config, -o/--outdir

When the above response appears, the preparation for using CLI is complete.

If you are using the Neural Network Console Windows version, the included Python environment can be used.

Set the environment variables according to the Environment Variable settings on the ENGINE tab of the setup window. Specifically, open the command prompt, and input the following two commands. Replace {Neural Network Console Installation Path} with the actual path where Neural Network Console is installed.

SET PYTHONPATH={Neural Network Console Installation Path}\libs\nnabla\python\src

SET PATH={Neural Network Console Installation Path}\libs\nnabla\python\src\nnabla;{Neural Network Console Installation Path}\libs\Miniconda3;{Neural Network Console Installation Path}\libs\Miniconda3\Scripts

Execute Python as follows, and check that Neural Network Libraries can be imported.

> python

Python 3.6.1 |Continuum Analytics, Inc.| (default, May 11 2017, 13:25:24) [MSC v.1900 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import nnabla

2017-xx-xx xx:xx:xx,xxx [nnabla][INFO]: Initializing CPU extension...

>>>
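The same import check can also be scripted instead of typed into the interactive prompt. The following is a minimal sketch using only the Python standard library; the helper name `is_importable` is hypothetical and is not part of Neural Network Libraries.

```python
import importlib.util


def is_importable(module_name):
    """Return True if Python can locate the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


# Report whether the current Python environment can find nnabla.
if is_importable("nnabla"):
    print("nnabla found")
else:
    print("nnabla not found; check the PYTHONPATH and PATH settings")
```

If the second message appears, the environment variables described above are the first thing to re-check.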

Next, execute from Python the following CLI command, which is included in the Neural Network Libraries package that comes with Neural Network Console.

> python {Neural Network Console Installation Path}\libs\nnabla\python\src\nnabla\utils\cli\cli.py forward

2017-xx-xx xx:xx:xx,xxx [nnabla][INFO]: Initializing CPU extension...

usage: cli.py forward [-h] -c CONFIG [-p PARAM] [-d DATASET] -o OUTDIR

cli.py forward: error: the following arguments are required: -c/--config, -o/--outdir

When the above response appears, the preparation for using the CLI of the Neural Network Libraries that come with the Neural Network Console Windows version is complete.

1.3 Executing inference using the command line interface

Inference can be executed by calling the CLI of Neural Network Libraries with the following options.

python {CLI Path}/cli.py forward

-c Network configuration file (results.nnp)

-d Dataset CSV file of input data

-o Inference result output folder

“forward” that comes after cli.py specifies the execution of inference.

Use the -c option to specify the network structure and parameter file “results.nnp”.

Use the -d option to specify the name of the dataset CSV file to perform inference on. The format of the dataset CSV file specified here is the same as that used on Neural Network Console. The dataset CSV file specified with the -d option only needs to include the input data to be used in the inference (for example, in the case of a neural network that estimates label y from image x, label y does not need to be included). To quickly check the operation, you can use a validation dataset.

Use the -o option to specify the output folder. The execution results of inference performed on the dataset CSV file specified with the -d option are saved in a file named result.csv in the folder specified with the -o option.
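When the CLI has to be called from another program, the three options above can be assembled in a small wrapper. The following is a sketch only: the function names `build_forward_command` and `run_forward` are hypothetical, the paths are placeholders, and it assumes that the Python interpreter running the script is the one in which Neural Network Libraries is installed.

```python
import os
import subprocess
import sys


def build_forward_command(cli_path, config, dataset, outdir):
    """Assemble the argument list for 'cli.py forward' with -c, -d and -o."""
    return [sys.executable, cli_path, "forward",
            "-c", config, "-d", dataset, "-o", outdir]


def run_forward(cli_path, config, dataset, outdir):
    """Create the output folder if needed, then run inference through the CLI."""
    os.makedirs(outdir, exist_ok=True)
    subprocess.run(build_forward_command(cli_path, config, dataset, outdir),
                   check=True)


# Placeholder paths; replace them with real locations before calling run_forward.
command = build_forward_command("{CLI Path}/cli.py", "results.nnp",
                                "input.csv", "output_folder")
print(" ".join(command))
```

Passing the arguments as a list rather than as one string avoids quoting problems when a path contains spaces.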

The following is an example of executing an inference on a training result of the 01_logistic_regression sample project from the command line interface using the Neural Network Libraries included with Neural Network Console that is installed in c:\neural_network_console. The c:\tmp folder specified as the output folder must be created in advance.

> python C:\neural_network_console\libs\nnabla\python\src\nnabla\utils\cli\cli.py forward -c C:\neural_network_console\samples\sample_project\tutorial\basics\01_logistic_regression.files\20171021_151530\results.nnp -d C:\neural_network_console\samples\sample_dataset\MNIST\small_mnist_4or9_test.csv -o C:\tmp\

2017-xx-xx xx:xx:xx,xxx [nnabla][INFO]: Initializing CPU extension...

...

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 64 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 128 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 192 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 256 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 320 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 384 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 448 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: data 500 / 500

2017-xx-xx xx:xx:xx,xxx [nnabla] [Level 99]: Forward Completed.

If you try to execute inference on a neural network trained with a GPU in an environment in which only a CPU is available, an error occurs because a GPU is not available. If this occurs, you can execute it by opening net.nntxt with a text editor and editing global_config at the top as follows:

global_config {
  default_context {
    backend: "cpu"
    array_class: "CpuCachedArray"
  }
}

When using the Neural Network Console Cloud version, change the extension of the result.nnp file to zip, unzip the file, make the same edit in the .nntxt file included in result.nnp, and re-zip the file for use.
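The unzip, edit, and re-zip steps can also be scripted. The following is a rough sketch using the standard `zipfile` and `re` modules. The function names are hypothetical, and it assumes that `backend` and `array_class` appear only in the global_config block of the .nntxt file; check the file in a text editor before relying on it. The sample text uses placeholder values, not values taken from a real training result.

```python
import re
import zipfile


def force_cpu_context(nntxt_text):
    """Set backend and array_class to the CPU values shown above."""
    text = re.sub(r'backend:\s*"[^"]*"', 'backend: "cpu"', nntxt_text)
    return re.sub(r'array_class:\s*"[^"]*"',
                  'array_class: "CpuCachedArray"', text)


def rewrite_nnp(src_path, dst_path, edit=force_cpu_context):
    """Copy an .nnp (zip) archive, applying `edit` to every .nntxt member."""
    with zipfile.ZipFile(src_path) as src, \
            zipfile.ZipFile(dst_path, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            data = src.read(info.filename)
            if info.filename.endswith(".nntxt"):
                data = edit(data.decode("utf-8")).encode("utf-8")
            dst.writestr(info.filename, data)


# Illustrative input with placeholder values (not from a real result file).
sample = ('global_config {\n'
          '  default_context {\n'
          '    backend: "gpu-backend-placeholder"\n'
          '    array_class: "GpuArrayPlaceholder"\n'
          '  }\n'
          '}\n')
print(force_cpu_context(sample))
```

For the Windows version, `force_cpu_context` alone can be applied to the text of net.nntxt. For the Cloud version, `rewrite_nnp("result.nnp", "result_cpu.nnp")` would write an edited copy and leave the downloaded file untouched.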

2. Executing inference using the Python API

Inference using the Python API is the most standard way of executing inference using the Neural Network Libraries. It allows the rich functions of the Neural Network Libraries to be used directly and excels in terms of how easy it is to link with other programs and in terms of performance.

2.1 Obtaining a trained neural network and preparing an environment for using Neural Network Libraries

By following the same procedure as when executing inference using the command line interface, obtain a trained neural network and prepare an environment for using Neural Network Libraries.

2.2 Obtaining the Python code for the network to execute inference on

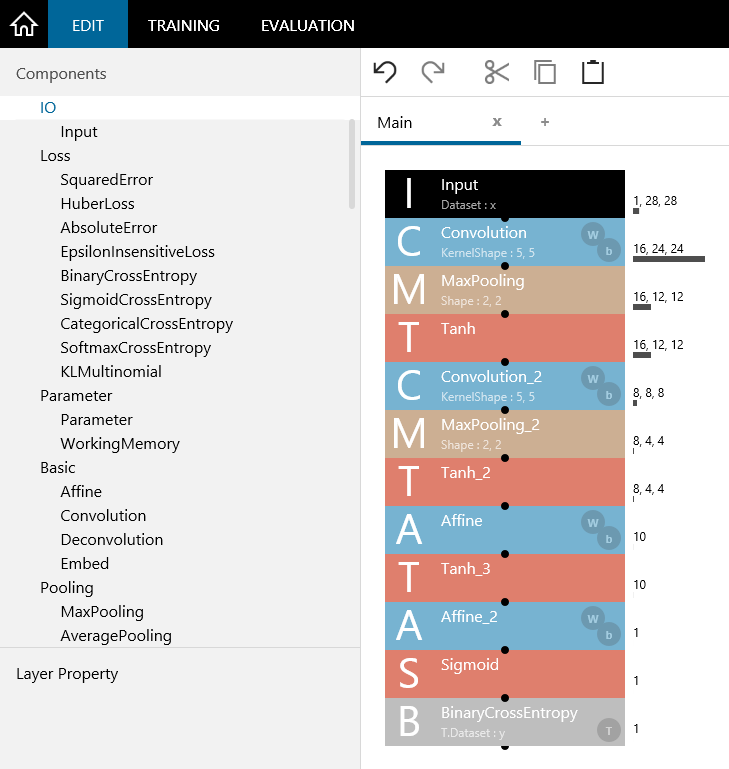

On the Neural Network Console Windows version, open the project containing the network you want to execute inference on. Here, 02_binary_cnn.sdcproj will be used as an example.

02_binary_cnn.sdcproj sample project

Edit the neural network that was used for training, and create a neural network for inference. In the case of 02_binary_cnn, the layer for determining the loss is not needed for inference, so delete the last BinaryCrossEntropy layer.

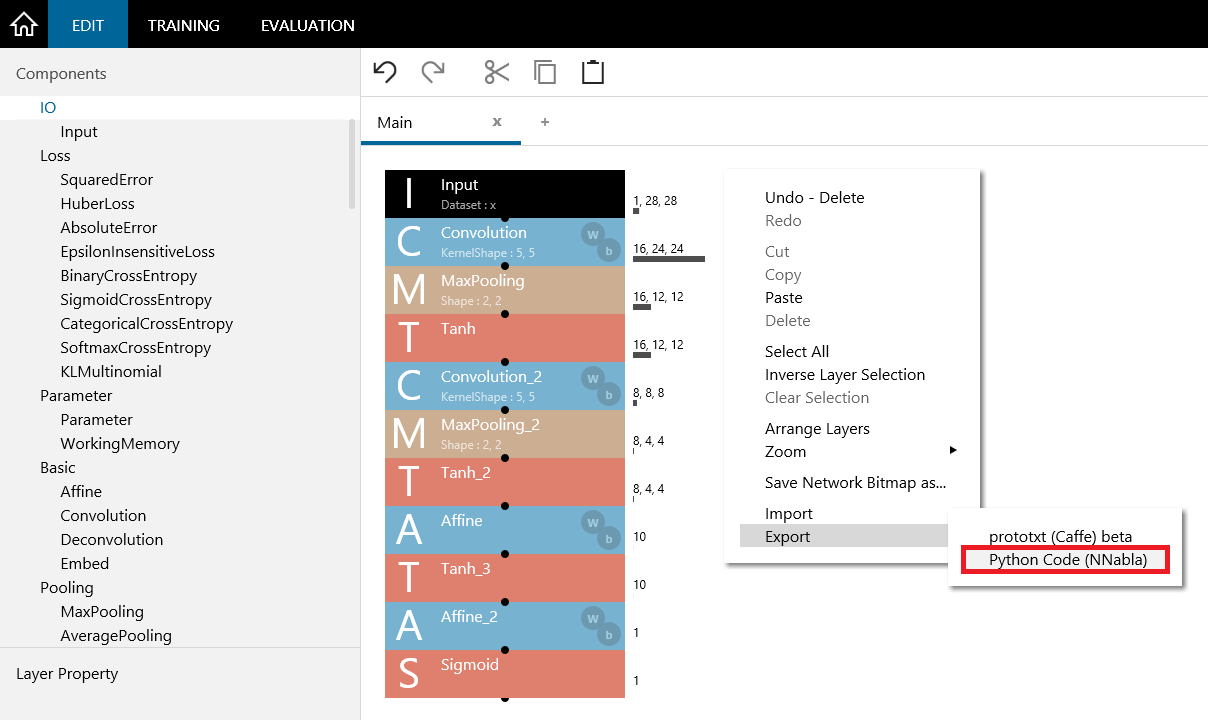

When the design of the neural network for inference is complete, right-click the network to open a shortcut menu, and click Export and then Python Code (NNabla).

Deleting the loss layer and clicking Export and then Python Code (NNabla)

The code for defining the network being edited using the Neural Network Libraries is copied to the clipboard.

import nnabla as nn
import nnabla.functions as F
import nnabla.parametric_functions as PF

def network(x, test=False):
    # Input -> 1,28,28
    # Convolution -> 16,24,24
    with nn.parameter_scope('Convolution'):
        h = PF.convolution(x, 16, (5,5), (0,0))
    # MaxPooling -> 16,12,12
    h = F.max_pooling(h, (2,2), (2,2), True)
    # Tanh
    h = F.tanh(h)
    # Convolution_2 -> 8,8,8
    with nn.parameter_scope('Convolution_2'):
        h = PF.convolution(h, 8, (5,5), (0,0))
    # MaxPooling_2 -> 8,4,4
    h = F.max_pooling(h, (2,2), (2,2), True)
    # Tanh_2
    h = F.tanh(h)
    # Affine -> 10
    with nn.parameter_scope('Affine'):
        h = PF.affine(h, (10,))
    # Tanh_3
    h = F.tanh(h)
    # Affine_2 -> 1
    with nn.parameter_scope('Affine_2'):
        h = PF.affine(h, (1,))
    # Sigmoid
    h = F.sigmoid(h)
    return h

2.3 Adding the necessary code for inference

Here, you will add the code necessary for inference to the code defining the network.

# Load parameters
nn.load_parameters('{training result path}/results.nnp')
# Prepare input variable
x = nn.Variable((1,1,28,28))
# Set input data in x.d
# x.d = ...
x.data.zero()
# Build network for inference
y = network(x, test=True)
# Execute inference
y.forward()
print(y.d)

First, load the training result parameter file using nn.load_parameters. Specify the path to the results.nnp file.

Next, create a variable for data input. Set the shape of the variable to the number of data items that inference will be performed on simultaneously, followed by the size of each data item. In this example, inference will be performed on one image at a time. Because the input data of 02_binary_cnn is an image of size 1,28,28, create variable x with size (1,1,28,28).

Next, input data into the created variable x. The content of a Neural Network Libraries variable can be handled as a NumPy array by using “.d”. Here, to quickly check the operation, x.data.zero() is used to fill the content of x with zeros.

The next line uses the Python code exported from Neural Network Console to build a network that takes x as input and computes y. The parameter test=True specifies that a network for executing inference will be built.

Finally, execute y.forward() to execute a forward calculation for inference. The inference results are stored in variable y, so you can refer to y.d to obtain the results.
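The shape rule described above (number of images first, then the size of each image) also applies when several images are processed at once. The following is a small NumPy-only sketch of preparing such a batch. The helper name `make_batch` is hypothetical, and the commented lines at the end show how the result might be passed to the variable, assuming Neural Network Libraries is installed.

```python
import numpy as np


def make_batch(images):
    """Stack equally shaped (channels, height, width) arrays into one batch."""
    return np.stack(images).astype(np.float32)


# Four dummy 1,28,28 inputs filled with zeros, as in the quick check above.
images = [np.zeros((1, 28, 28)) for _ in range(4)]
batch = make_batch(images)
print(batch.shape)  # (4, 1, 28, 28)

# With Neural Network Libraries available, the variable would be created
# with the same shape before building the network, for example:
#   x = nn.Variable(batch.shape)
#   x.d = batch
```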

2.4 Executing the created code for inference from Python

The following shows the result when the above Python code is saved in test.py and executed.

> python test.py

2017-xx-xx xx:xx:xx,xxx [nnabla][INFO]: Initializing CPU extension...

[[ 0.10899857]]

You can see that the classification result (0.1089…) has been output for input x created with x.data.zero(). For a further detailed tutorial of the Neural Network Libraries Python API, see the following:

http://nnabla.readthedocs.io/en/latest/python/tutorial.html
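Because the network ends with a Sigmoid layer, the value in y.d lies between 0 and 1, and a program using the result usually needs to turn it into a label. The following is a minimal sketch of that step. The helper name `to_labels` and the threshold of 0.5 are assumptions for illustration and are not specified by Neural Network Console.

```python
import numpy as np


def to_labels(probabilities, threshold=0.5):
    """Map sigmoid outputs of shape (batch, 1) to integer labels 0 or 1."""
    probabilities = np.asarray(probabilities)
    return (probabilities[:, 0] >= threshold).astype(int)


# The output shown above for the all-zero input.
print(to_labels([[0.10899857]]))  # [0]
```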

2.5 Notes on executing code for inference from Python

Before image data is input into x.d, it may be necessary to normalize the image. If the Image Normalization (1.0/255.0) check box on the DATASET tab was selected when training was performed on Neural Network Console, divide the pixel brightness values ranging from 0 to 255 loaded from the file by 255.0 to scale the values in the range of 0.0 to 1.0, and then substitute them into x.d.

When handling a color image, the image loaded using Python’s scipy.misc.imread or the like has a (height, width, 3) size, but to substitute it into x.d, the dimensions have to be reordered to (3, height, width) using numpy.transpose or the like. Specifically, change the order of the tensor dimensions as follows:

import nnabla as nn
from scipy.misc import imread

# filename: path to the image file to load
im = imread(filename).transpose(2, 0, 1)
x = nn.Variable((1, ) + im.shape)
x.d = im.reshape(x.shape)
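The two notes in this section (normalization and dimension reordering) can be combined into one preprocessing step that does not depend on a particular image reader. This can be useful because scipy.misc.imread is no longer available in recent SciPy releases. The following NumPy-only sketch uses a hypothetical helper name, `to_network_input`; the handling of grayscale (height, width) images is an added assumption, and the input array here is synthetic.

```python
import numpy as np


def to_network_input(image, normalize=True):
    """Convert a (height, width[, 3]) image array to (1, channels, height, width)."""
    array = np.asarray(image, dtype=np.float32)
    if array.ndim == 2:
        array = array[np.newaxis, :, :]    # grayscale: add a channel axis
    else:
        array = array.transpose(2, 0, 1)   # color: (H, W, 3) -> (3, H, W)
    if normalize:
        array = array / 255.0              # matches Image Normalization (1.0/255.0)
    return array[np.newaxis, ...]          # add the batch axis


# Synthetic all-white color image standing in for a loaded file.
rgb = np.full((32, 48, 3), 255, dtype=np.uint8)
batch = to_network_input(rgb)
print(batch.shape)  # (1, 3, 32, 48)
```

Set `normalize=False` if the Image Normalization check box was not selected when the network was trained.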