Neural Network Console provides basic loss functions, such as SquaredError, BinaryCrossEntropy, and CategoricalCrossEntropy, as layers.

However, depending on the problem, you will often need to optimize a loss function of your own design.

This tutorial explains how to define your own loss functions that are not available in Neural Network Console and use them in training.

1. Which value is treated as the loss?

Before explaining how to define loss functions, let’s review how loss functions are handled in Neural Network Console.

For the network specified under Optimizer on the CONFIG tab, Neural Network Console takes the average of the output values of each final layer and then minimizes the sum of these averages as the loss.

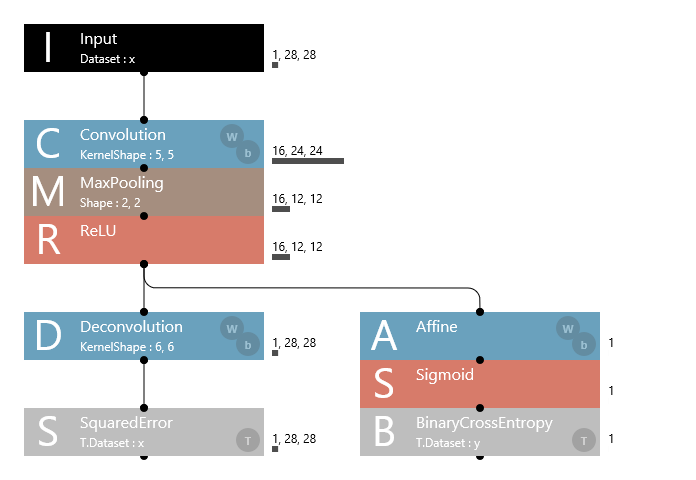

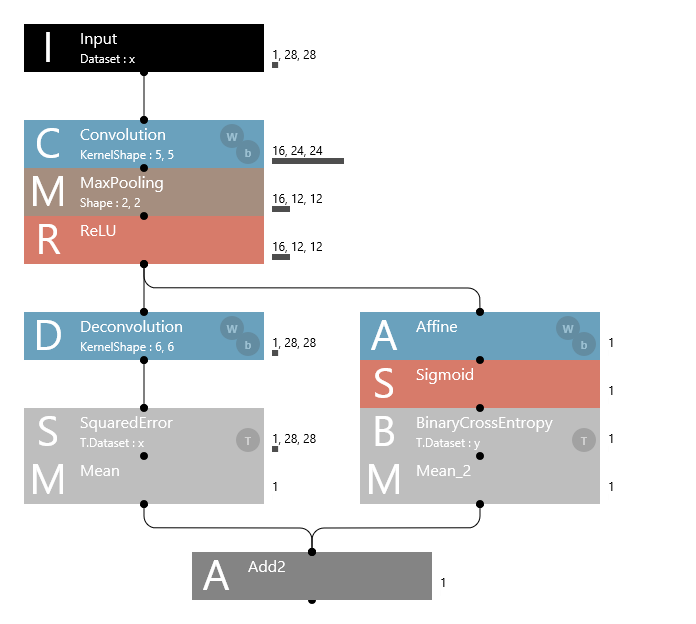
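In symbols, writing $y_{k,i}$ for the $i$-th output value of the $k$-th final layer, $N_k$ for the number of output values of that layer, and $K$ for the number of final layers (notation introduced here for illustration), the loss that is minimized is:

```latex
L = \sum_{k=1}^{K} \frac{1}{N_k} \sum_{i=1}^{N_k} y_{k,i}
```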

For example, training behaves identically for network A below, which has multiple final layers, and network B, which averages the output values of each final layer and sums these averages into a single output.

Network A

Network B
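The equivalence can be checked numerically with a small sketch. It assumes only the aggregation rule described above (average each final layer, then sum); plain Python lists stand in for layer outputs, and the values are made up for illustration.

```python
"""Illustrative sketch of the loss Neural Network Console minimizes,
assuming the rule described above: average each final layer's outputs,
then sum those averages. Plain lists stand in for layer outputs."""


def mean(values):
    """Average of a flat list of output values."""
    return sum(values) / len(values)


def console_loss(final_outputs):
    """Sum, over all final layers, of the mean of each layer's outputs."""
    return sum(mean(out) for out in final_outputs)


# Hypothetical outputs of the two final layers of network A.
loss_layer_1 = [0.5, 1.5, 1.0, 3.0]  # mean 1.5
loss_layer_2 = [2.0, 4.0]            # mean 3.0

# Network A: two final layers, aggregated by the console.
loss_a = console_loss([loss_layer_1, loss_layer_2])

# Network B: the network itself averages each branch and adds the results,
# ending in a single one-element output.
single_output = [mean(loss_layer_1) + mean(loss_layer_2)]
loss_b = console_loss([single_output])

print(loss_a, loss_b)  # 4.5 4.5
```

Because both networks yield the same scalar loss from the same values, their gradients, and therefore their training, are the same.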

By taking advantage of this behavior and designing a network so that the loss value appears at its end, you can implement a wide variety of custom loss functions.

2. Defining a squared error loss

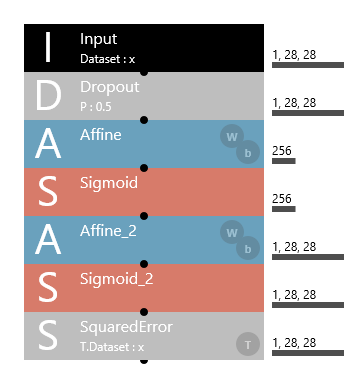

Here, let’s assume that the SquaredError layer is not available in Neural Network Console and define our own squared error loss without it. For this exercise, we will use the 06_auto_encoder sample project. This sample project trains an auto encoder that compresses input images into a lower-dimensional representation and then reconstructs the original images from it. It uses the SquaredError layer to minimize the squared error between the reconstructed image and the input image.

06_auto_encoder sample project

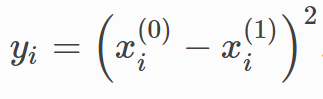

As expressed by the following formula, the squared error loss function calculates, element by element, the square of the difference between its two inputs.

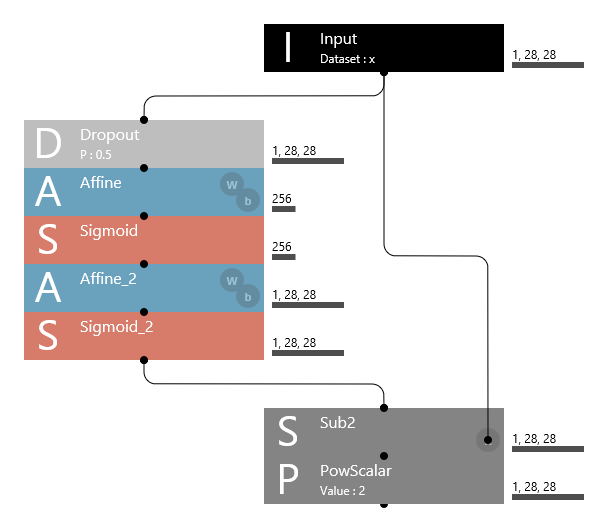
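Written out with the x(0), x(1) notation used below, the element-wise squared error for the $i$-th element is:

```latex
y_i = \left( x^{(0)}_i - x^{(1)}_i \right)^2
```

Combined with the averaging described in section 1, the value actually minimized is the mean of these $y_i$.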

Based on this formula, we will define our own loss function using Math, Arithmetic, and other layers. In 06_auto_encoder, x(0) is the reconstructed image and x(1) is the input image, so the squared error can be expressed as follows.

Example in which we define our own SquaredError loss function in the 06_auto_encoder sample

Here, the Sub2 layer calculates the element-wise difference between x(0) and x(1), and the PowScalar layer, with its exponent set to 2, squares each element of that difference.
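To see that the Sub2 + PowScalar chain reproduces the squared error, the following sketch mimics both layers on a few made-up pixel values. The helpers `sub2`, `pow_scalar`, and `squared_error` are hypothetical pure-Python stand-ins for what the layers compute element-wise, not Neural Network Console or nnabla APIs.

```python
"""Sketch of a custom squared-error loss built from Sub2 and PowScalar.
The helpers below are hypothetical stand-ins that mimic the element-wise
computation of those layers; they are not Neural Network Console APIs."""


def sub2(x0, x1):
    """Element-wise difference x0 - x1 (what the Sub2 layer computes)."""
    return [a - b for a, b in zip(x0, x1)]


def pow_scalar(x, val):
    """Element-wise power x ** val (what the PowScalar layer computes)."""
    return [v ** val for v in x]


def squared_error(x0, x1):
    """Reference definition: (x0_i - x1_i) ** 2 for every element."""
    return [(a - b) ** 2 for a, b in zip(x0, x1)]


# Hypothetical flattened pixels: reconstruction x(0) and input x(1).
reconstructed = [0.25, 0.5, 0.75, 1.0]
original = [0.0, 0.5, 1.0, 0.5]

custom = pow_scalar(sub2(reconstructed, original), 2)
reference = squared_error(reconstructed, original)

# The console then averages the final layer's outputs to obtain the loss.
loss = sum(custom) / len(custom)
print(custom, loss)  # [0.0625, 0.0, 0.0625, 0.25] 0.09375
```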

In this way, by expressing formulas with Math, Arithmetic, and other layers, you can use a variety of loss functions in Neural Network Console beyond those already provided.

3. Notes on defining original loss functions

When you define your own loss function, you may need to manually define an inference network.

From the network structure defined in the Main network (the network named Main), Neural Network Console automatically creates a network for evaluation during training (MainValidation) and a network for inference (MainRuntime). MainRuntime is configured to use the value that feeds into the preset loss function layer in the Main network as its final output. Therefore, if you define your own loss function and the Main network contains no preset loss function layer, MainRuntime will produce no output.
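The behavior described above can be modeled roughly as follows. This is a simplified, hypothetical sketch, not the console's implementation: each network is reduced to an ordered chain of (name, type) pairs (real networks are graphs, and SquaredError or Sub2 also take the input image as a second input), and the set of preset loss types is only an illustrative subset.

```python
"""Simplified, hypothetical model of how MainRuntime picks its output.
A network is reduced to an ordered chain of (name, type) pairs; real
networks are graphs, and the loss-layer set is an illustrative subset."""

PRESET_LOSS_TYPES = {"SquaredError", "BinaryCrossEntropy", "CategoricalCrossEntropy"}


def derive_runtime_output(main_chain):
    """Return the name of the layer that feeds the first preset loss layer,
    or None when the chain contains no preset loss layer."""
    for index, (_, layer_type) in enumerate(main_chain):
        if layer_type in PRESET_LOSS_TYPES and index > 0:
            return main_chain[index - 1][0]
    return None


# Hypothetical, heavily shortened chains loosely modeled on 06_auto_encoder.
with_preset = [("Input", "Input"), ("Affine", "Affine"),
               ("Sigmoid_2", "Sigmoid"), ("SquaredError", "SquaredError")]
with_custom = [("Input", "Input"), ("Affine", "Affine"),
               ("Sigmoid_2", "Sigmoid"), ("Sub2", "Sub2"),
               ("PowScalar", "PowScalar")]

print(derive_runtime_output(with_preset))  # Sigmoid_2
print(derive_runtime_output(with_custom))  # None
```

With the custom Sub2 + PowScalar loss there is no preset loss layer to cut before, so this model, like MainRuntime, finds no output.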

In such cases, you can design an inference network manually and specify it so that inference is performed correctly. The procedure is as follows.

- On the EDIT tab, click the + button to the right of the Main network tab to add a new network.

- Click the new network's name (Network_2) and rename it (e.g., “Runtime”).

- Select all the contents of the Main network (Ctrl+A) and copy them (Ctrl+C).

- Paste the copied Main network into the Runtime network (Ctrl+V).

- Delete the section corresponding to the loss function from the Runtime network.

- If BatchNormalization is in use, set the BatchStat property of all BatchNormalization layers in the Runtime network to False.

- Connect an Identity layer to the end of the Runtime network (Sigmoid_2 in the case of 06_auto_encoder above), and set its Name property to the name of the output variable for inference.

- Change Network under Executor on the CONFIG tab from “MainRuntime” to “Runtime”.
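The steps above amount to a small transformation of the Main network, sketched below. Everything here is a hypothetical illustration: layers are plain dicts in a linear chain, the field names (`name`, `type`, `batch_stat`), the `config` dict, and the output name `reconstruction` are assumptions rather than Neural Network Console's project file format, and the made-up chain includes a BatchNormalization layer only to exercise that step.

```python
"""Hypothetical sketch of the manual Runtime-network steps listed above.
Layers are plain dicts in a simplified linear chain; the field names
("name", "type", "batch_stat") and the config dict are illustrative
assumptions, not Neural Network Console's project file format."""

import copy


def build_runtime(main_chain, last_inference_layer, output_name):
    # Copy the Main network and paste it into a new network.
    runtime = copy.deepcopy(main_chain)
    # Delete the loss section: every layer after the last inference layer.
    names = [layer["name"] for layer in runtime]
    runtime = runtime[: names.index(last_inference_layer) + 1]
    # Turn off BatchStat on every BatchNormalization layer for inference.
    for layer in runtime:
        if layer["type"] == "BatchNormalization":
            layer["batch_stat"] = False
    # Append an Identity layer named after the inference output variable.
    runtime.append({"name": output_name, "type": "Identity"})
    return runtime


# Made-up Main chain ending in a custom Sub2 + PowScalar loss.
main = [
    {"name": "Input", "type": "Input"},
    {"name": "BatchNormalization", "type": "BatchNormalization", "batch_stat": True},
    {"name": "Sigmoid_2", "type": "Sigmoid"},
    {"name": "Sub2", "type": "Sub2"},
    {"name": "PowScalar", "type": "PowScalar"},
]
config = {"executor": {"network": "MainRuntime"}}

runtime = build_runtime(main, "Sigmoid_2", "reconstruction")
# Point the Executor at the new network instead of MainRuntime.
config["executor"]["network"] = "Runtime"
print([layer["name"] for layer in runtime])
```

Deep-copying first keeps the Main network untouched, mirroring how copy and paste in the EDIT tab leaves the original network intact for training.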